In the previous article we examined the hidden complexity of nested modules and the ripple effect, and saw how unpleasant the consequences can be for operations and lifecycle management. Beginner mistakes open the floodgates to such problems, above all the mistake of stuffing several or even all Terraform modules into a single Git repository. With a bit of seniority and clean planning, however, such problems can be minimized from the outset.

In this part, let us look at how to deal with such dependencies in practice without the pain getting out of hand.

Practical solution approaches for module version tracking

The prerequisite here is this golden rule:

- When using the free, open-source version of Terraform, every Terraform module ALWAYS gets its own Git repository and is ALWAYS versioned individually via tags.

- With Terraform Enterprise, the internal module registry is used. This allows version constraints as we know them from provider blocks, that is, restrictions using operators such as "=", "<=", ">=", "~>".

Why would you want that?

Let us consider a real-world example from the OCI world. Imagine a base module whose latest update would break the functionality of dependent modules:

# Base Module: base/oci-compute v1.2.0

variable "freeform_tags" {

type = map(string)

default = {}

description = "Freeform tags for cost allocation"

# NEW in v1.2.0: Mandatory CostCenter Tag

validation {

condition = can(var.freeform_tags["CostCenter"])

error_message = "CostCenter tag is required for all compute instances."

}

}

resource "oci_core_instance" "this" {

for_each = var.instances

display_name = each.value.name

compartment_id = var.compartment_id

freeform_tags = var.freeform_tags

# ... etc.

}

However, the service module web-application v1.3.4 was developed before this breaking change:

# Service Module: services/web-application v1.3.4

module "compute_instances" {

source = "git::https://gitlab.ict.technology/modules//base/oci-compute"

instances = {

web1 = { name = "web-server-1" }

web2 = { name = "web-server-2" }

}

compartment_id = var.compartment_id

# freeform_tags missing - Breaking Change!

}

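If you do it like this, it will blow up, as described in part 6a of this article series: without a ref, the Git source resolves to the latest state of the repository's default branch. As soon as that branch contains v1.2.0, the next terraform init on a fresh checkout (or terraform init -upgrade) pulls in the new validation, and the call without a CostCenter tag fails.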

Strategy 1 (Terraform): Explicit pinning of service and base module versions

Base modules are the modules that use a provider and manage resources. They always have their own individual repository and are always versioned.

This also applies to service modules. Service modules only pull in base modules; they do not implement resources themselves. When pulling in base modules, service modules should explicitly pin the version. Only then can transitive upgrades take place in a controlled manner.

It works similarly with root modules. These pull in service modules and pin their versions exactly.
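As a minimal sketch, a root module pinning its service module could look like the following. Only the module name and the version number come from the example in this article; the repository path for the service module simply mirrors the URL pattern of the base module and is an assumption, as is the variable wiring.

```hcl
# Root module (sketch): pins the service module to one exact Git tag.
# The source path is assumed by analogy with the base module URL.
variable "compartment_id" {
  type        = string
  description = "OCID of the target compartment"
}

module "web_application" {
  source = "git::https://gitlab.ict.technology/modules//services/web-application?ref=v1.3.5"

  compartment_id = var.compartment_id
}
```

The root module then only ever sees the service module release it was tested against, regardless of what happens further down in the hierarchy.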

So let us once again consider our example of a service module from the previous section:

# Service Module: services/web-application v1.3.5

module "compute_instances" {

source = "git::https://gitlab.ict.technology/modules//base/oci-compute?ref=v1.1.0"

instances = {

web1 = { name = "web-server-1" }

web2 = { name = "web-server-2" }

}

compartment_id = var.compartment_id

# freeform_tags still missing - harmless here, v1.1.0 predates the CostCenter validation

}

Do you see the difference? Instead of

source = "git::https://gitlab.ict.technology/modules//base/oci-compute"

we now pin the exact version of the base module:

source = "git::https://gitlab.ict.technology/modules//base/oci-compute?ref=v1.1.0"

And this way, the change in the base module does not hurt anyone in ongoing operations.
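When the team is ready to adopt v1.2.0, the upgrade becomes a deliberate change in the service module rather than a surprise. Here is a sketch of what such a follow-up release might look like. The input variable cost_center is hypothetical and not part of the original module.

```hcl
# Service Module: services/web-application (hypothetical follow-up release)

variable "compartment_id" {
  type = string
}

# Hypothetical new input so that callers can supply the tag value.
variable "cost_center" {
  type        = string
  description = "Cost center used for the mandatory CostCenter tag"
}

module "compute_instances" {
  # The pin is raised deliberately, after testing against v1.2.0.
  source = "git::https://gitlab.ict.technology/modules//base/oci-compute?ref=v1.2.0"

  instances = {
    web1 = { name = "web-server-1" }
    web2 = { name = "web-server-2" }
  }

  compartment_id = var.compartment_id

  # Satisfies the CostCenter validation added in base module v1.2.0.
  freeform_tags = {
    CostCenter = var.cost_center
  }
}
```

Because cost_center has no default in this sketch, the variant changes the service module's own interface, which is exactly the kind of change that callers need to be told about through the version number.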

What can still cause pain, however, is when a larger service module or a root module calls the same module several times and pulls in different versions. That will not work well, so you cannot avoid deciding which version of a module you want to pull in.

Unfortunately, Terraform does not automatically detect duplicate or inconsistent pinning within a root module (the same base module in different versions). It only validates that each individual source specification can be resolved, not that the versions are consistent across multiple calls.
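To make the problem concrete, here is a sketch of a configuration that Terraform initializes without any warning, although the same base module is pulled in at two different tags. The module call names and the tag value are made up for illustration.

```hcl
variable "compartment_id" {
  type = string
}

# Each call resolves successfully on its own, so `terraform init`
# does not complain about the mismatch between them.
module "web_instances" {
  source = "git::https://gitlab.ict.technology/modules//base/oci-compute?ref=v1.1.0"

  instances      = { web1 = { name = "web-server-1" } }
  compartment_id = var.compartment_id
}

module "worker_instances" {
  # Same base module, different tag: an inconsistent pin.
  source = "git::https://gitlab.ict.technology/modules//base/oci-compute?ref=v1.2.0"

  instances      = { worker1 = { name = "worker-1" } }
  compartment_id = var.compartment_id
  freeform_tags  = { CostCenter = "example" }
}
```

Terraform installs one copy of the module per call, so both versions end up side by side in the same plan. Whether that is intentional is something only an external check or a review can tell.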

Here I provide you with check-module-dependencies.sh, a script that you are welcome to use as inspiration and within a non-commercial context. Due to its length of almost 200 lines I am not showing the script itself here, but I am giving you a link to the Git repository: https://github.com/ICT-technology/check-module-dependencies/

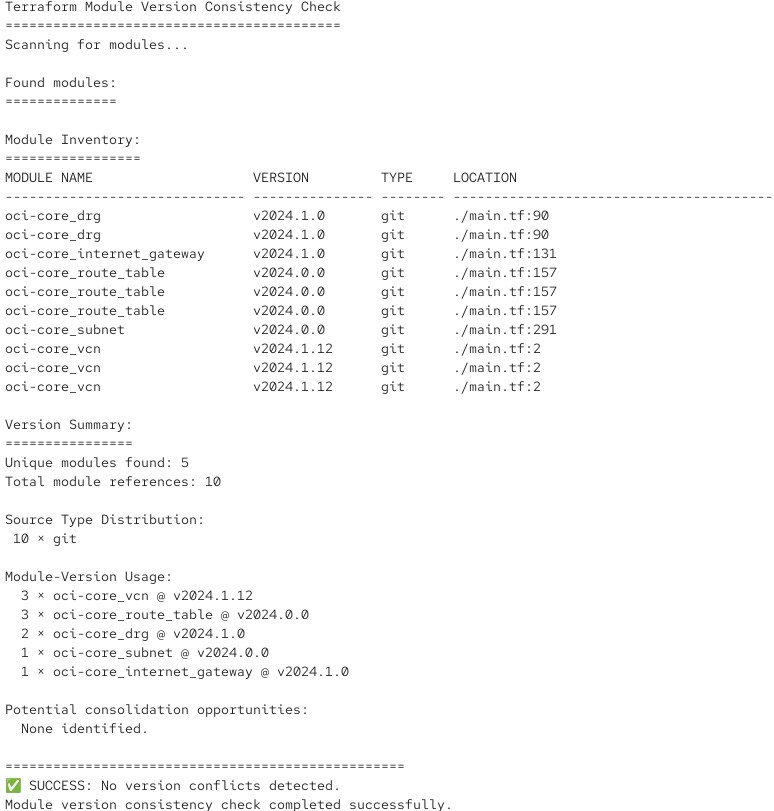

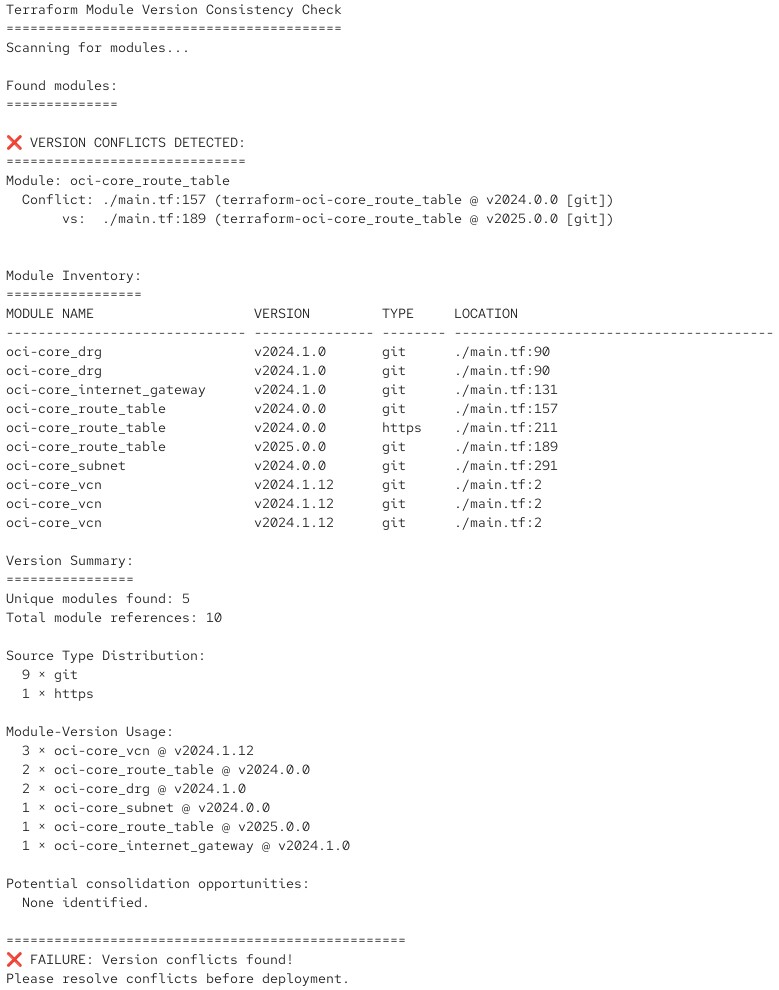

The script analyzes a root or service module and checks the version numbers of the modules pulled in. If everything works well, it produces output like this:

And if it detects that version conflicts would occur, it outputs an appropriate error message with a return code:

You can integrate such a script into your CI/CD pipeline of the test environment and thus automate the check.

Strategy 2 makes it easier.

Strategy 2 (Terraform Enterprise): Semantic Versioning with ranges

With Terraform Enterprise it becomes simple (and significantly more professional). There you should use the integrated Private Module Registry for true semantic versioning, just as you already do with provider versioning, only now as part of a module call:

# Terraform Enterprise on-prem and HCP Terraform only

module "web_service" {

source = "registry.ict.technology/ict-technology/web-application/oci"

version = "~> 1.1.0" # Permits 1.1.0, 1.1.1, 1.1.2 ... 1.1.x, but NOT 1.0.x or 1.2.x

environment = "production"

}
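For readers who prefer to see the bounds spelled out: the pessimistic operator ~> with a three-part version number is shorthand for an explicit range. The following call should behave the same as the one above. The module label is arbitrary, and note that the version argument is only available for registry sources, not for Git URLs.

```hcl
module "web_service_explicit" {
  source = "registry.ict.technology/ict-technology/web-application/oci"

  # Equivalent to "~> 1.1.0": any 1.1.x release, but nothing from 1.2.0 on.
  version = ">= 1.1.0, < 1.2.0"

  environment = "production"
}
```

By contrast, a two-part constraint such as "~> 1.1" would also accept 1.2.0 and every later 1.x release, so the number of digits in the constraint matters.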

This is how it looks in practice:

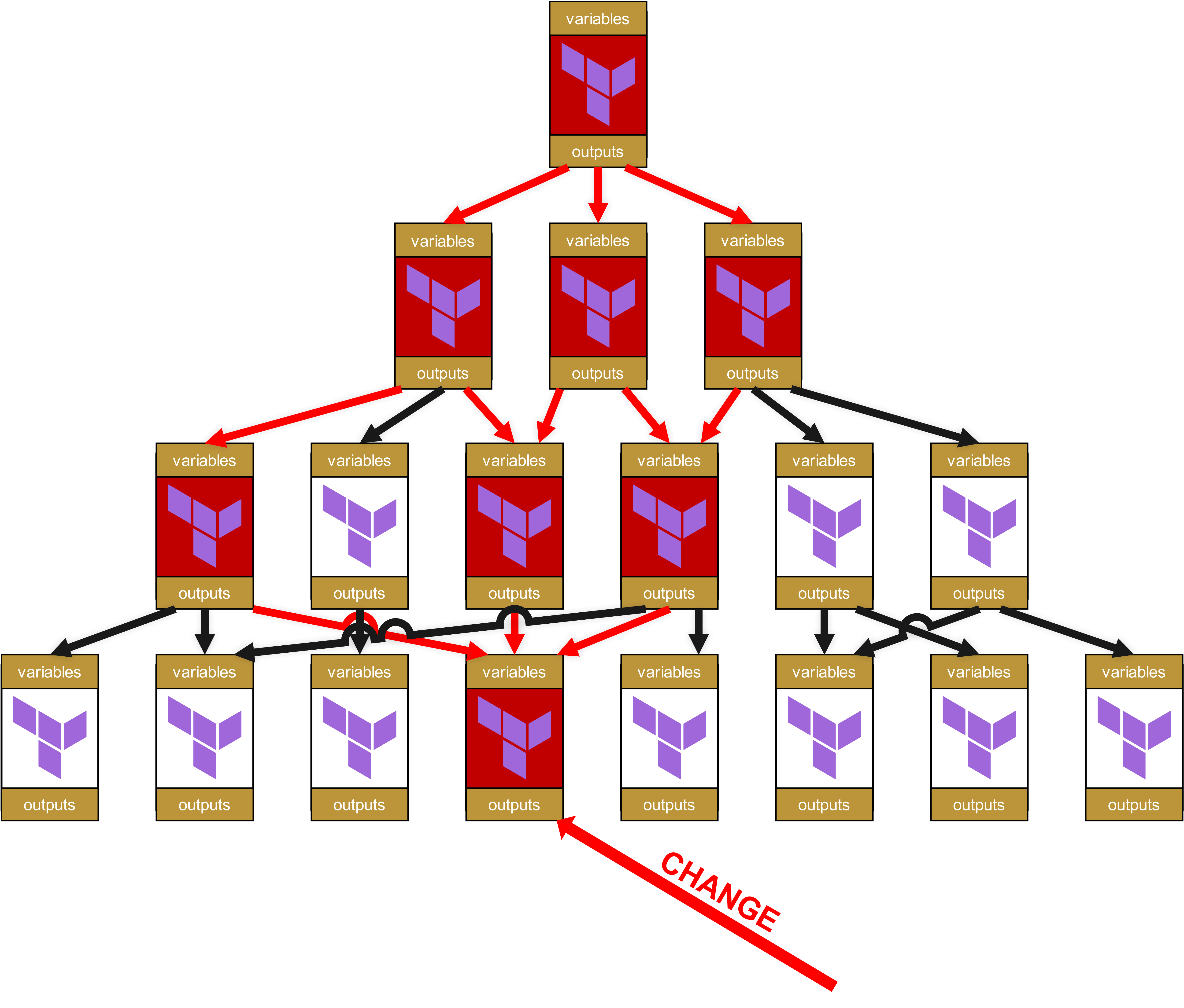

In this constellation, when a base module or a service module is patched and receives a new version number, this does not affect the functionality of your root module and the pipeline. Let us once again bring up this illustration from the previous chapter:

You can see here the four layers. Layer 1 is at the top, and at the bottom in layer 4 there is a change. Therefore, the module in layer 4 receives a new version number, for example an upgrade from v1.1.0 to v1.2.0.

But because the modules in layer 3 are still pinned to version v1.1.0, the new v1.2.0 has no effect. First, the modules in layer 3 must be successfully tested. There are then two possible scenarios for these tests:

- The service modules in layer 3 still function flawlessly -> the pinning of the base module is raised from v1.1.0 to v1.2.0 and the service modules receive a new patch release, e.g. from v1.3.4 to v1.3.5.

- The service modules in layer 3 no longer function flawlessly. This means that the base module v1.2.0 contained a breaking change. Therefore, the affected service modules in layer 3 are patched and re-versioned with a larger version jump, for example from v1.3.4 to v1.4.0 (under strict Semantic Versioning, a change that breaks the service module's own interface would even call for v2.0.0). With this version jump, the maintainers of the modules in the next higher layer 2 then also clearly see that it is an important functional update and not just a change of a dependency.

With strategy 2 using Terraform Enterprise, the consequence would also be that patch updates, in the described case from v1.3.4 of the service module to v1.3.5, are then transparently passed on to the next higher layer. This again has consequences:

- With Terraform Enterprise and its integrated private module registry you save yourself unnecessary lifecycle management in dependent modules. This reduces personnel effort and risk of error -> immediate, additional added value and therefore one of the enterprise features that HashiCorp or IBM charges for.

- This also means that you must strictly follow rules in versioning and pay attention: What exactly is a minor update? How does a minor update differ from a major update? You therefore need a plan, a defined structure and internal compliance in versioning. You can no longer ride around like a cowboy in the green fields and shoot cool-looking version numbers into the air. We will look at how to do this correctly later in part 7 of this article series.

The rate-of-change trap in module dependencies

A frequently overlooked problem with nested modules is the cumulative change risk. The deeper the module hierarchy, the higher the probability of unexpected changes. With the introduction of NIS2 at the latest, risk management will become mandatory, so let us briefly consider this in context. We are using a simplified model with percentages, not a truly professional and mathematically reliable risk calculation, because the point is for you to quickly understand what this topic is about.

With a flat hierarchy it is trivial to specify a change frequency within the framework of operational guidelines.

A classic example: "We have a maintenance window every Tuesday afternoon from 3 p.m. to 6 p.m.".

In practice this means that there could only be a problem in operations every Tuesday afternoon (and perhaps also Wednesdays). Once per week, so at most 52 times a year (we will leave aside the impact and length of an outage, the rough calculation is sufficient).

If we now subtract holidays and the change freezes around Christmas and Easter, we arrive at around 34 potential operational disruptions per year. 1 / 34 = 0.0294, meaning we are dealing with a risk of about 3%.

With Infrastructure-as-Code and module dependencies it becomes somewhat more complicated, primarily in hip chaos environments under the cloak of alleged agility and continuous deployment. In reality, the 3% risk is no longer the whole story.

For example:

Root Module

├── Change probability: 10% (rare environment changes)

└── Service Module

    ├── Change probability: 30% (business logic updates)

    └── Base Module

        └── Change probability: 50% (provider updates, bugfixes)

Cumulative change probability = 1 - (0.9 × 0.7 × 0.5) = 68.5%

In this three-tier hierarchy, and assuming that the layers change independently of one another, there is therefore a 68.5% chance that at least one component will change in a given period.
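The calculation follows the pattern P = 1 - (1 - p_root) × (1 - p_service) × (1 - p_base): first the probability that nothing changes at all, then its complement. If you want to play with your own numbers, a small sketch like the following can be evaluated with terraform console or terraform apply. The local and output names are made up, and the model assumes that the layers change independently of one another.

```hcl
locals {
  # Per-period change probabilities from the example above.
  change_probability = {
    root    = 0.10
    service = 0.30
    base    = 0.50
  }

  # Probability that none of the three layers changes in the period.
  p_no_change = (
    (1 - local.change_probability.root) *
    (1 - local.change_probability.service) *
    (1 - local.change_probability.base)
  )

  # Probability that at least one layer changes.
  cumulative_change_probability = 1 - local.p_no_change
}

output "cumulative_change_probability" {
  value = local.cumulative_change_probability
}
```

With the values above the output should come out at roughly 0.685. Adding a fourth layer means adding one more factor to p_no_change, which shows how quickly the cumulative value approaches 100% in deeper hierarchies.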

Now it should really be absolutely clear that you can no longer proceed like James Dean once did - in view of such numbers you really need to know what you are doing.

Conclusion

With some foresight and discipline, potentially brutal incidents with nested modules can therefore also be avoided from an operational perspective. As you have seen, however, this is still a risk that you must absolutely take into account in your risk management.

In the next part 6c we will then conclude this subtopic by looking at some advanced practices and recommended approaches such as Policy-as-Code. Thank you for accompanying me this far.