Retrieval-Augmented Generation (RAG) represents a significant evolution in Large Language Model (LLM) architecture, combining the power of parametric and non-parametric memory systems. At its core, RAG addresses one of the fundamental limitations of traditional LLMs: their reliance on static, pre-trained knowledge that can become outdated or may lack specific context needed for accurate responses. The RAG framework represents a significant leap forward in the quest to create language models and by integrating the power of retrieval, ranking, and generative techniques, RAG opens up new possibilities for AI systems that can engage in truly knowledgeable, context-aware communication.

This is part 1 of a multi-part series in which we look into RAGs, how to use them and how they work.

The original scientific treatment of Retrieval-Augmented Generation was published in May 2020 by Patrick Lewis and his colleagues with the title Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. Patrick Lewis is a London-based Natural Language Processing Research Scientis who works at co:here, whose enterprise language models we at ICT.technology provide to customers in addition to the Open Source Llama models. If you are interested in the gory details of this crucial research, you can download a copy of the 2021 revision here: https://arxiv.org/pdf/2005.11401.pdf.

Part 1: The Fundamentals of Retrieval-Augmented Generation (RAG)

Imagine a vast library where artificial intelligence acts as both the librarian and the researcher. This is essentially what Retrieval-Augmented Generation (RAG) does - it transforms an AI from a system that can only recite memorized information into one that can actively search through, evaluate, and use information from a dynamic knowledge base.

Let's explore how this digital library system works.

How RAG Works: A High-Level Overview

In a traditional library, you have librarians who know how to find information, research assistants who evaluate sources, and experts who help interpret the findings. The work with library books - their content is static, it never changes. New books may be added from time to time, but most of them are old.

Think of RAG as having a brilliant librarian and researcher who doesn't just rely on memorized knowledge (like the library books) but has instant access to a vast, constantly updatable reference system. But unlike our traditional library analogy, this system operates on sophisticated mathematical principles and neural architectures.

The Library Staff at Work

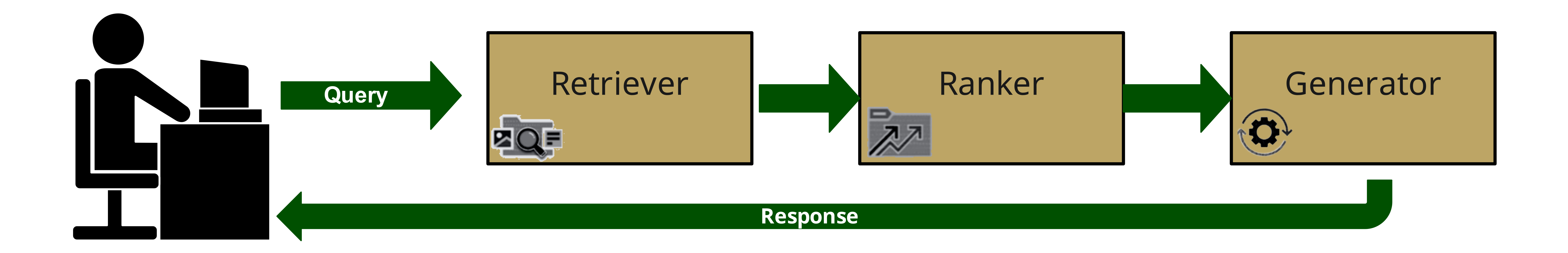

The RAG framework's sophistication comes from its three primary components, each built on advanced neural network architectures: The Retriever, the Ranker, and the Generator. These components work together seamlessly to provide contextually relevant, accurate, and informative responses.

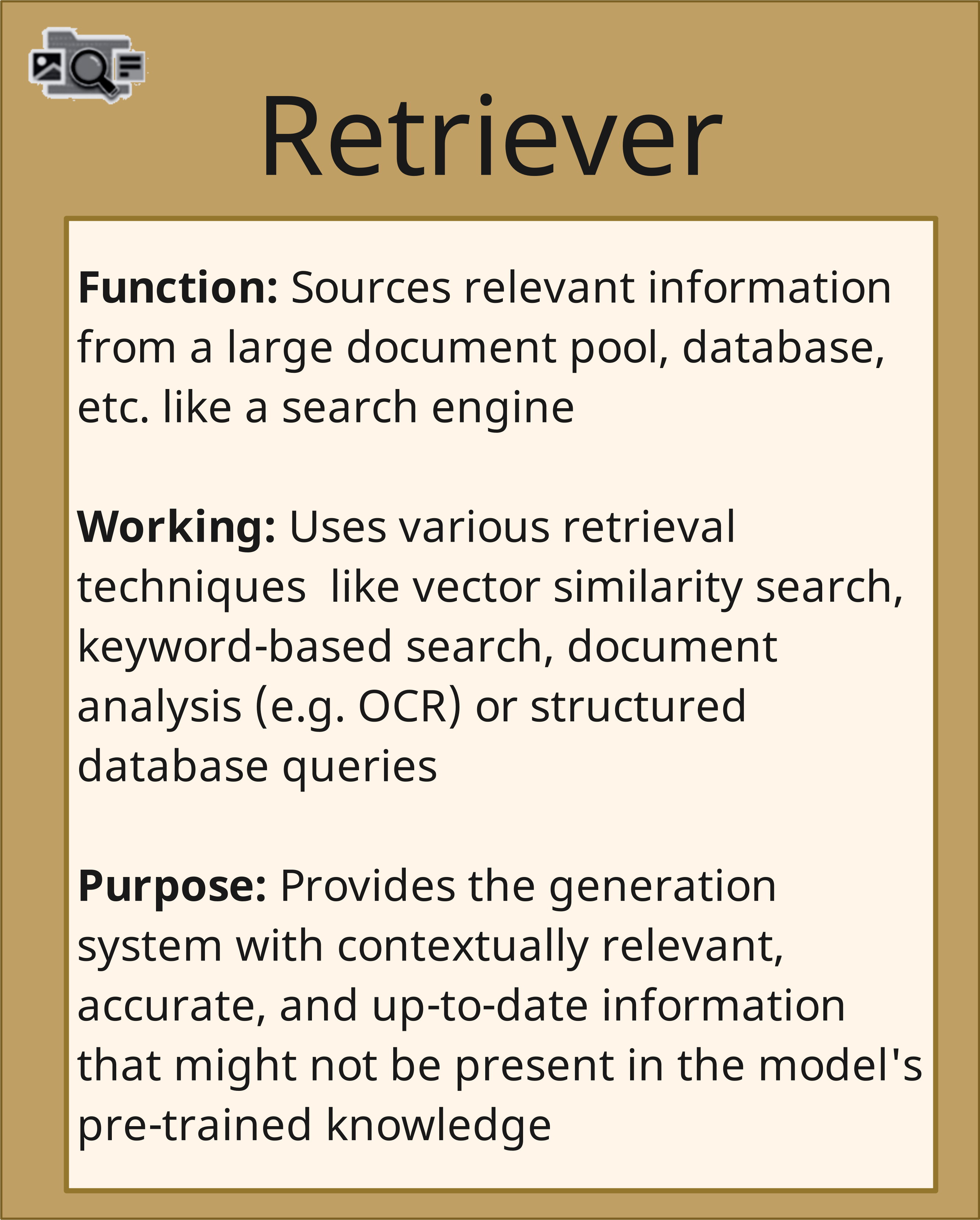

1. The Retriever: The Lightning-Fast Librarian

Our digital librarian doesn't walk through physical aisles - they navigate through vast databases of information at incredible speeds. When you ask a question, they:

Our digital librarian doesn't walk through physical aisles - they navigate through vast databases of information at incredible speeds. When you ask a question, they:

- Search through digital "shelves" using vector similarity (like finding books with similar themes)

- Use keyword matching (similar to checking book indexes, but instantaneously)

- Query structured databases (like consulting a perfectly organized card catalog)

- Employ semantic search (understanding what you're asking for, even if you use different words)

This digital librarian doesn't just look at titles - they scan through entire documents in milliseconds, finding relevant information that might be buried deep within the text. Its primary role is to scan through vast amounts of data, both structured and unstructured, to find content that is relevant to the query at hand.

When a user submits a query, the Retriever employs various retrieval techniques to gather potentially useful information:

- Vector similarity search: The Retriever represents both the query and the documents in the database as high-dimensional vectors (currently up to 1024 dimensions). It then uses cosine similarity or other distance metrics to find documents with vectors most similar to the query vector. This is analogous to a librarian finding books with similar themes or content.

- Keyword-based search: The Retriever looks for exact matches or variations of the keywords from the query within the document corpus. This is similar to a librarian checking book indexes or titles for specific terms.

- Structured database queries: If the information is stored in a structured format like a relational database, the Retriever can use SQL or similar query languages to fetch relevant data. This is akin to a librarian consulting a perfectly organized card catalog.

The goal of the Retriever is to cast a wide net and gather all potentially relevant information from a large corpus or database, using various retrieval techniques such as vector similarity search, keyword-based search, and structured database queries. Its purpose is to provide the generation system with contextually relevant, accurate, and up-to-date information that might not be present in the model's pre-trained knowledge. This gathered information will be further refined in the subsequent stages.

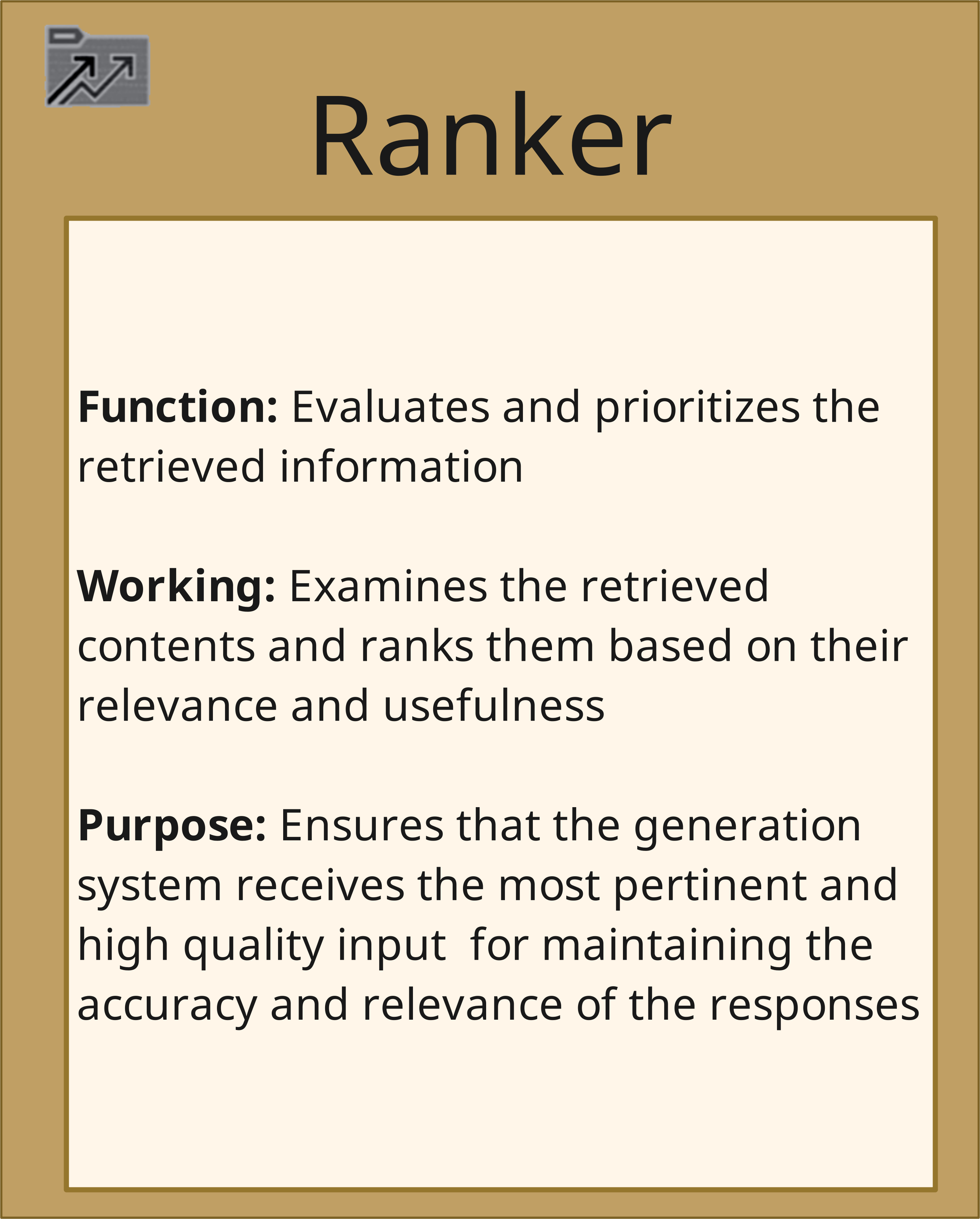

2. The Ranker: The Reference Desk Expert

After the retriever brings back potentially relevant information, the ranker takes on the role of an experienced reference librarian who evaluates each source. The Ranker uses various algorithms to determine the relevance and usefulness of each piece of retrieved information in answering the given query. Some of the factors the Ranker considers include:

After the retriever brings back potentially relevant information, the ranker takes on the role of an experienced reference librarian who evaluates each source. The Ranker uses various algorithms to determine the relevance and usefulness of each piece of retrieved information in answering the given query. Some of the factors the Ranker considers include:

- How relevant is this information, how well does the information align with the intent and context of the user's question?

- How reliable is this source?

- Is the information up-to-date?

- How well does this explain what we need to know?

- Are there any contradictions or gaps that need to be filled between sources?

Just as a good reference librarian knows which sources to prioritize, the ranker ensures that only the most valuable information moves forward. Once the Retriever has gathered a pool of potentially relevant documents, the Ranker steps in to evaluate and also prioritize this information the Retriever found in the various document pools, company databases, and other sources.

By effectively ranking the retrieved information, the Ranker ensures that the Generator receives the most pertinent and high-quality input. This step is crucial for maintaining the accuracy and relevance of the final response.

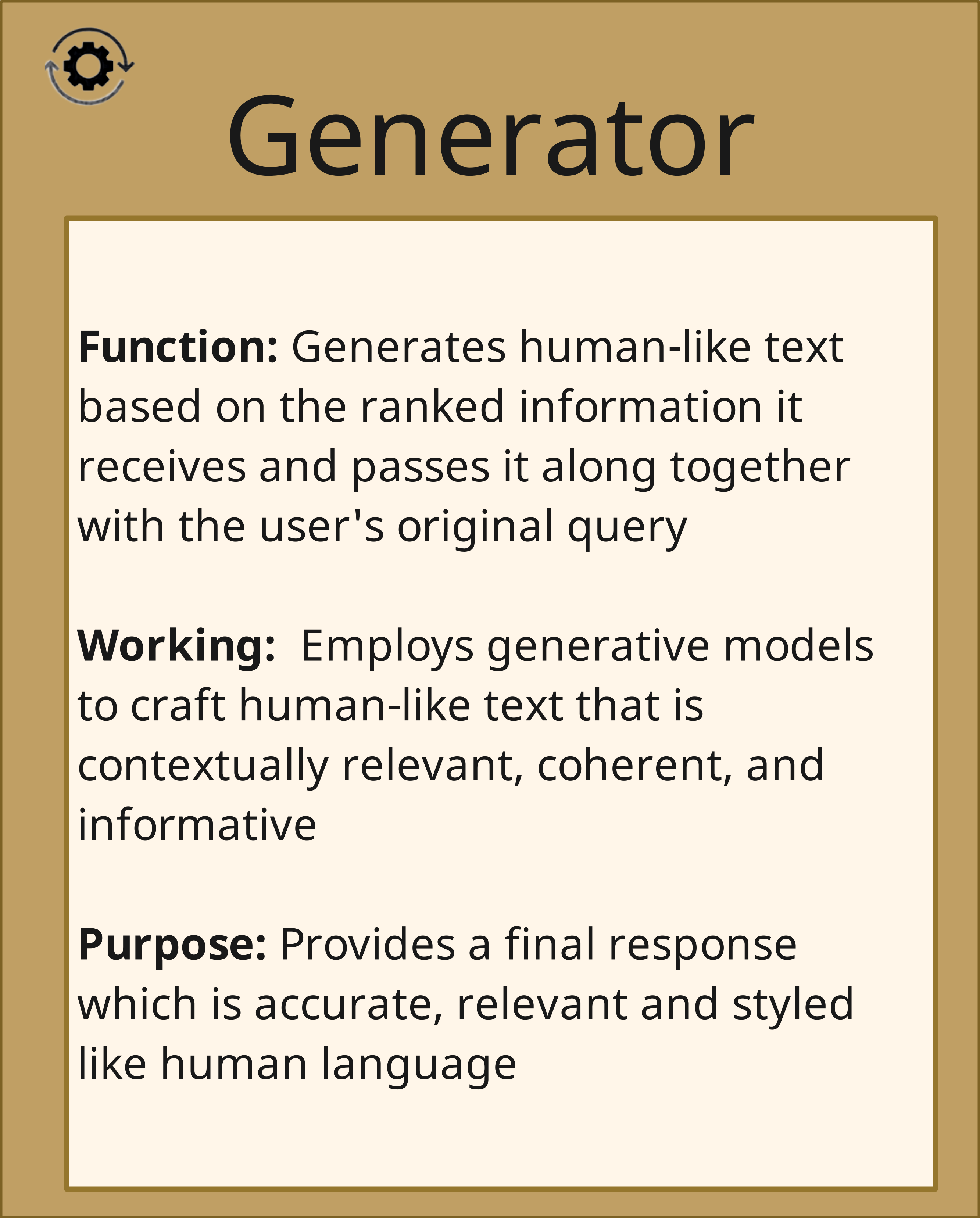

3. The Generator: The Research Synthesizer

Think of the generator as a skilled research assistant who takes all the carefully selected materials and compiles them into a clear, comprehensive response. It is the final component of the RAG framework and crucial for maintaining the accuracy and relevance of the final response. The Generator does:

Think of the generator as a skilled research assistant who takes all the carefully selected materials and compiles them into a clear, comprehensive response. It is the final component of the RAG framework and crucial for maintaining the accuracy and relevance of the final response. The Generator does:

- Combine information from multiple sources cohesively

- Maintain consistency throughout the explanation

- Present technical details accurately

- Format the information appropriately for the query

- Resolve any conflicts between different sources

The Generator is typically a sequence-to-sequence (seq2seq) model, which is a type of neural network architecture that can generate output sequences based on input sequences. In the context of RAG, the input sequence is the user's query along with the top-ranked retrieved information, and the output sequence is the generated response.

Seq2seq models consist of two main components:

- Encoder: The encoder processes the input sequence (query + retrieved information) and converts it into a fixed-size vector representation that captures the essential information. This is similar to a researcher reading through the selected sources and mentally summarizing the key points.

- Decoder: The decoder takes the encoded representation and generates the output sequence (response) one token at a time, based on the input and its own previous outputs. This is akin to the researcher composing a response based on their understanding of the query and the relevant information.

The Generator uses the power of seq2seq models to craft human-like responses that are not only factually accurate and relevant but also coherent, fluent, and styled in a way that is typical of human language. It ensures that the final response seamlessly integrates the retrieved information into a natural, conversational flow.

RAG in Action: A Library Analogy

To better understand how the RAG framework operates, let's revisit our library analogy and see how the Retriever, Ranker, and Generator components map to the roles of library staff. Just as a well-functioning library depends on the seamless collaboration of librarians, reference desk experts, and research assistants, the effectiveness of the RAG framework relies on the harmonious interplay of the Retriever, Ranker, and Generator components.

To better understand how the RAG framework operates, let's revisit our library analogy and see how the Retriever, Ranker, and Generator components map to the roles of library staff. Just as a well-functioning library depends on the seamless collaboration of librarians, reference desk experts, and research assistants, the effectiveness of the RAG framework relies on the harmonious interplay of the Retriever, Ranker, and Generator components.

When someone comes to our digital library with a question, here's what happens:

1. Initial Query Processing

When a patron comes with a query, the system first receives your question.

Just like a librarian might ask clarifying questions, the system first analyzes what you're really looking for. For this, it considers any previous conversation context (like a librarian remembering your research topic of your last visit).

2. The Search Process

The Retriever is like a fast-paced librarian who knows the library's collection inside out. Like the librarian, the retriever swiftly navigates through the shelves, databases, and catalogs to gather all the books, articles, and other resources that might contain relevant information.

The Retriever searches through the digital collections (which do not only include document pools, but also the relational databases of your company and other media).Multiple search strategies might be used simultaneously. Relevant materials are quickly gathered and information about the sources is noted.

Finally, the librarian returns from the archives with a vast collection of material and now it must be determined which of the information within does really matter.

3. Evaluation and Selection

The Ranker is like the reference desk expert who takes the stack of materials from the librarian and evaluates each one. They consider factors like the publication date, the authority of the author, and how well the content aligns with the patron's needs. They sort the materials based on their relevance and quality, setting aside the most useful sources.

For this, the Ranker evaluates all retrieved materials and all sources are prioritized based on the relevance of the content and the reliability of the source. Duplicate information is consolidated and finally, the most valuable sources are selected.

4. Creating the Response

The Generator is like a seasoned research assistant who takes the curated stack of materials and synthesizes the information into a comprehensive, well-structured response. They know how to extract key points, connect ideas from different sources, and present the information in a clear, engaging manner. They ensure that the final product is not just a collection of facts but a coherent, contextually appropriate answer to the patron's question.

To accomplish this, all the information which was selected by the Ranker is organized. Then a coherent response is crafted. In the next step, the answer is checked against the sources again. If approved, the final response is presented to the customer.

Why This Digital Library System Matters

The RAG framework brings several significant advantages to the realm of language models:

The RAG framework brings several significant advantages to the realm of language models:

- Extensibility: By decoupling the knowledge retrieval process from the pre-trained language model, RAG allows for easy integration of new, up-to-date information. The collection is always expanding and new information can be added anytime; an LLM would have to be re-trained each time new content is added, resulting in knowledge cut-offs at certain dates. As a result, responses are based on actual sources rather than just "memory" and the library can be updated without rebuilding the entire system.

- Specialization: RAG models can be tailored to specific domains or use cases by curating the document corpus they draw from. This enables the creation of powerful, domain-specific question-answering systems.

- Interpretability: The RAG framework provides a clear trail of the information used to generate a response. Every piece of information can be traced back to its source. This transparency can be crucial in applications where the provenance of information is important, such as in medical or legal contexts.

- Efficiency: By using a combination of retrieval techniques and ranking algorithms, RAG can quickly sift through vast amounts of information and focus on the most relevant pieces, reducing the computational burden on the Generator.

Real-World Applications

Just as libraries serve many purposes, RAG systems are versatile:

- Technical documentation systems that stay current with the latest updates

- Customer service systems that can access and understand company policies

- Research tools that can find and synthesize information from multiple sources

- Educational systems that provide accurate, well-sourced explanations

RAG transforms AI from a system that simply remembers information into one that can actively research, evaluate, and present information - just like a world-class library system, but at digital speeds.As we continue to explore the intricacies of RAG in the subsequent parts of this article series, keep in mind this fundamental framework and how its components work together to create a system that is not just a storehouse of static knowledge, but an active, dynamic participant in the quest for understanding.