"To hell with visibility - as long as it works!"

That is the mindset with which most platform engineering teams rush headlong into disaster. It is like cooking blindfolded in a strange kitchen: it works for a while, but when something burns, it really burns.

And nothing is worse than the look of helpless faces when everything is on fire.

In the previous two parts, dependency pain was our main topic. Today, let us take a look at what options we have to rescue a child that has already fallen into the well - alive, if possible.

Options for visualizing module dependencies

The first problem in complex module hierarchies is visibility. On paper, there is terraform graph for this purpose:

terraform graph | dot -Tpng > graph.png

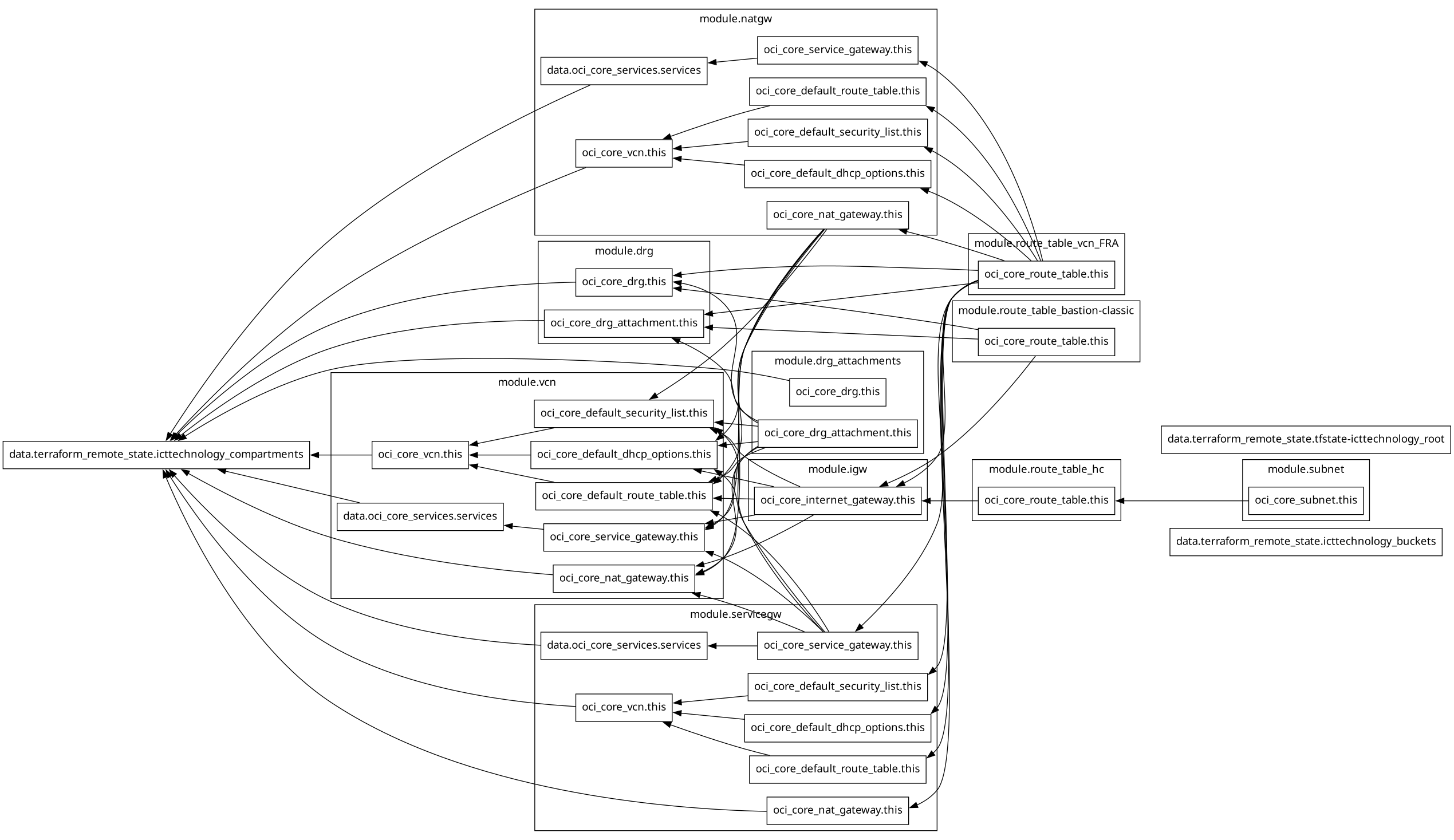

The output of Terraform’s terraform graph may be well-intentioned, but after conversion into a graphic it looks rather useless. Terraform does not support GraphML, GrapAR, TGF, GML, or JSON, but only the .dot format, which, while common in code generation and automation on Linux, is not supported by any enterprise application for modeling or data analysis when it comes to visualization.

To convert .dot into a graphic, you need the graphviz package, which then produces something like this:

In my view, this looks less like my network infrastructure and more like a pile-up at a Formula 1 race in Monaco, when everyone tries to take the first corner at the same time right after the start. Usable it is not.

And even if you manage - with a lot of manpower and fine-tuning - to turn this .dot file into a clear diagram, you can still make about as much practical use of it in enterprise environments as of the original generated .dot file, namely none.

That leaves terraform show, since this command lists all resources. But it will not help you much either, as it is also unable to visualize dependencies.

This is where a small script comes in, doing its best to somehow render the output of terraform graph in the shell. With this script, you immediately see that module X depends on remote state Y.

It is called terraform-graph-visualizer.sh, and instead of presenting you with more than 200 lines of Bash code here, I will simply give you the URL to the GitHub repository: GitHub - ICT-technology/terraform-graph-visualizer

The output from the graph above then looks like this (heavily shortened representation):

    $ terraform graph | ~/bin/terraform-graph-visualizer.sh
    ╔══════════════════════════════════════════════════════════╗
    ║              TERRAFORM GRAPH VISUALIZATION               ║
    ╚══════════════════════════════════════════════════════════╝

    Analyzing: stdin (terraform graph)

    GRAPH STATISTICS
    ═════════════════
    ├─ Total Nodes: 96
    ├─ Total Edges: 63
    ├─ Modules: 10
    └─ Data Sources: 3

    TERRAFORM MODULES
    ══════════════════
    ├─ module.drg
    │  ├─ oci_core_drg_attachment.this
    │  └─ oci_core_drg.this
    ├─ module.drg_attachments
    │  ├─ oci_core_drg_attachment.this
    │  └─ oci_core_drg.this
    [...]

    DATA SOURCES
    ═════════════
    ├─ data.terraform_remote_state.icttechnology_buckets
    ├─ data.terraform_remote_state.icttechnology_compartments
    ├─ data.terraform_remote_state.tfstate-icttechnology_root

    DEPENDENCY RELATIONSHIPS
    ═════════════════════════
    ┌─ data.terraform_remote_state.icttechnology_compartments
    │  depends on:
    │  ├─ module.drg.oci_core_drg.this
    │  ├─ module.drg.oci_core_drg_attachment.this
    │  ├─ module.drg_attachments.oci_core_drg.this
    │  ├─ module.natgw.data.oci_core_services.services
    │  ├─ module.natgw.oci_core_vcn.this
    │  ├─ module.servicegw.data.oci_core_services.services
    │  ├─ module.servicegw.oci_core_nat_gateway.this
    │  ├─ module.servicegw.oci_core_vcn.this
    │  ├─ module.vcn.data.oci_core_services.services
    │  ├─ module.vcn.oci_core_nat_gateway.this
    │  └─ module.vcn.oci_core_vcn.this
    │
    ┌─ module.drg.oci_core_drg_attachment.this
    │  depends on:
    │  ├─ module.drg_attachments.oci_core_drg_attachment.this
    │  ├─ module.route_table_bastion-classic.oci_core_route_table.this
    │  └─ module.route_table_vcn_FRA.oci_core_route_table.this
    [...]

    Graph visualization complete!

Still not beautiful, but at least readable. And apart from pure dependencies, you can also immediately see here:

- Remote state references

- Fan-in/fan-out of conspicuous modules

- Data source hotspots
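The fan-in/fan-out view is easy to approximate yourself. The following is a minimal Python sketch, not the linked script: it assumes that edges in the terraform graph output appear as lines of the form "a" -> "b" (meaning a depends on b) and simply counts them per node. The function name fan_counts and the sample input are made up for illustration.

```python
import re
from collections import Counter

# Assumption: each edge is written as  "source" -> "target"  on one line.
EDGE = re.compile(r'"([^"]+)"\s*->\s*"([^"]+)"')

def fan_counts(dot_text):
    """Return (fan_out, fan_in) counters over all edges found in DOT text."""
    fan_out, fan_in = Counter(), Counter()
    for src, dst in EDGE.findall(dot_text):
        fan_out[src] += 1  # src depends on one more node
        fan_in[dst] += 1   # dst is depended on by one more node
    return fan_out, fan_in

# Hypothetical sample; real input would come from `terraform graph`.
sample = '''
digraph {
  "module.drg.oci_core_drg_attachment.this" -> "module.drg.oci_core_drg.this"
  "module.vcn.oci_core_nat_gateway.this" -> "module.vcn.oci_core_vcn.this"
  "module.vcn.oci_core_route_table.this" -> "module.vcn.oci_core_vcn.this"
}
'''

fan_out, fan_in = fan_counts(sample)
# Nodes with the highest fan-in are the ones most other resources rely on.
for node, count in fan_in.most_common():
    print(f"{count:3d} <- {node}")
```

Nodes at the top of that list are the hotspots: touching them affects the most dependents.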

Policy-as-Code: When Sentinel prevents the worst nonsense

With Terraform Enterprise, Sentinel is available as a Policy-as-Code engine. Sentinel ensures that corporate policies and regulatory requirements are adhered to - in other words, it prevents our truly foolish decisions before they can cause damage.

Sentinel policy for module version pinning

With a policy like this, you can enforce that modules are pinned to specific versions:

    import "tfconfig/v2" as tfconfig
    import "strings"

    // Local modules are referenced by relative path (./ or ../)
    is_local = func(src) {
    	return strings.has_prefix(src, "./") or strings.has_prefix(src, "../")
    }

    // Heuristic: source is VCS if it has a git:: prefix OR a URI scheme present
    is_vcs = func(src) {
    	return strings.has_prefix(src, "git::") or src contains "://"
    }

    // Extract the ref from the query (if present):
    // everything after "?ref=" until the end, "" if not present
    ref_of = func(src) {
    	parts = strings.split(src, "?ref=")
    	if length(parts) < 2 {
    		return ""
    	}
    	return parts[1]
    }

    // Registry modules: version argument must be X.Y.Z
    // (tfconfig/v2 exposes the version argument as version_constraint)
    has_exact_version = func(m) {
    	return m.version_constraint is not null and m.version_constraint matches "^[0-9]+\\.[0-9]+\\.[0-9]+$"
    }

    is_pinned = func(m) {
    	// local modules are excluded
    	if is_local(m.source) {
    		return true
    	}
    	// VCS modules: ref must be vX.Y.Z
    	if is_vcs(m.source) {
    		return ref_of(m.source) matches "^v[0-9]+\\.[0-9]+\\.[0-9]+$"
    	}
    	return has_exact_version(m)
    }

    is_valid_oci_module = func(m) {
    	if not (m.source contains "oci-") {
    		return true
    	}
    	// strict path structure + SemVer tag
    	if is_vcs(m.source) {
    		return m.source matches "^git::.+//base/oci-.+\\?ref=v[0-9]+\\.[0-9]+\\.[0-9]+$"
    	}
    	return has_exact_version(m)
    }

    // Policy: all non-local modules must be versioned
    mandatory_versioning = rule {
    	all tfconfig.module_calls as _, m {
    		is_pinned(m)
    	}
    }

    // Detailed validation for OCI modules
    validate_oci_modules = rule {
    	all tfconfig.module_calls as _, m {
    		is_valid_oci_module(m)
    	}
    }

    main = rule {
    	mandatory_versioning and validate_oci_modules
    }

That completes the first step.
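If you want to try the pinning logic without a Sentinel runtime, the same decision can be sketched in a few lines of Python. This is only an illustration of the rule described above, not a replacement for the policy; the function names and the example sources are assumptions made up for this sketch.

```python
import re

SEMVER_TAG = re.compile(r"v\d+\.\d+\.\d+")  # VCS ref such as v1.4.0
SEMVER = re.compile(r"\d+\.\d+\.\d+")       # registry version such as 1.4.0

def is_local(source):
    return source.startswith(("./", "../"))

def is_vcs(source):
    # Same heuristic as the policy: git:: prefix or any URI scheme.
    return source.startswith("git::") or "://" in source

def ref_of(source):
    # Everything after "?ref=", or "" if there is no ref.
    _, sep, ref = source.partition("?ref=")
    return ref if sep else ""

def is_pinned(source, version=None):
    if is_local(source):
        return True
    if is_vcs(source):
        return SEMVER_TAG.fullmatch(ref_of(source)) is not None
    return version is not None and SEMVER.fullmatch(version) is not None

# Hypothetical module calls as (source, version) pairs.
calls = [
    ("./modules/vcn", None),
    ("git::https://example.com/modules//base/oci-vcn?ref=v1.4.0", None),
    ("git::https://example.com/modules//base/oci-vcn?ref=main", None),
    ("app.terraform.io/example/vcn/oci", "~> 1.4"),
]
for source, version in calls:
    print("ok  " if is_pinned(source, version) else "FAIL", source)
```

A branch name such as main or a range such as ~> 1.4 fails on purpose: neither identifies exactly one release.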

One step further: Allow and deny lists using Policy-as-Code

If you want to take it one step further, a policy like the following adds more value. It introduces an allow list for module sources along with a deny list for faulty or vulnerable versions, optionally accompanied by “advisory” notices. However, do not adopt this policy 1:1. Adapt it to your own use cases and, above all, to versions and advisories that actually exist; otherwise the rules will not take effect:

    import "tfconfig/v2" as tfconfig
    import "strings"

    // Helper functions
    is_local = func(src) {
    	return strings.has_prefix(src, "./") or strings.has_prefix(src, "../")
    }

    is_vcs = func(src) {
    	return strings.has_prefix(src, "git::") or src contains "://"
    }

    ref_of = func(src) {
    	parts = strings.split(src, "?ref=")
    	if length(parts) < 2 {
    		return ""
    	}
    	return parts[1]
    }

    // Allowlist of module sources
    allowed_module_sources = [
    	"git::https://gitlab.ict.technology/modules//",
    	"app.terraform.io/ict-technology/",
    ]

    // Banned lists separated for VCS tags and Registry versions
    banned_vcs_tags = [
    	"v1.2.8", // CVE-2024-12345
    	"v1.5.2", // Critical bug in networking
    ]

    banned_registry_versions = [
    	"1.2.8",
    	"1.5.2",
    ]

    // VCS: tag in ref, Registry: version argument
    // (tfconfig/v2 exposes the version argument as version_constraint)
    is_not_banned = func(m) {
    	if is_local(m.source) {
    		return true
    	}
    	if is_vcs(m.source) {
    		return ref_of(m.source) not in banned_vcs_tags
    	}
    	// Registry
    	return m.version_constraint is not null and m.version_constraint not in banned_registry_versions
    }

    // Prints a warning for 1.x modules; always returns true so the rule still passes
    warn_if_old = func(m) {
    	if is_local(m.source) {
    		return true
    	}
    	if is_vcs(m.source) {
    		if strings.has_prefix(ref_of(m.source), "v1.") {
    			print("WARNING: Module", m.source, "uses v1.x - consider upgrading to v2.x")
    		}
    		return true
    	}
    	// Registry
    	if m.version_constraint is not null and strings.has_prefix(m.version_constraint, "1.") {
    		print("WARNING: Module", m.source, "uses 1.x - consider upgrading to 2.x")
    	}
    	return true
    }

    // Rule: only allowed sources OR local modules
    only_allowed_sources = rule {
    	all tfconfig.module_calls as _, m {
    		is_local(m.source) or
    			any allowed_module_sources as pfx { strings.has_prefix(m.source, pfx) }
    	}
    }

    // Rule: no banned versions
    no_banned_versions = rule {
    	all tfconfig.module_calls as _, m {
    		is_not_banned(m)
    	}
    }

    // Advisory: warning for old major versions (set policy enforcement level to "advisory")
    warn_old_modules = rule {
    	all tfconfig.module_calls as _, m {
    		warn_if_old(m)
    	}
    }

    // Main rule: hard checks must pass
    main = rule {
    	only_allowed_sources and no_banned_versions
    }
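To get a feeling for how allow and deny lists interact, here is a small Python sketch of the same checks. It reuses the placeholder prefixes and versions from the policy above; the function name findings and the example module paths are hypothetical and only serve the illustration.

```python
# Placeholder values copied from the policy; replace them with real ones.
ALLOWED_PREFIXES = (
    "git::https://gitlab.ict.technology/modules//",
    "app.terraform.io/ict-technology/",
)
BANNED_VCS_TAGS = {"v1.2.8", "v1.5.2"}
BANNED_REGISTRY_VERSIONS = {"1.2.8", "1.5.2"}

def findings(source, version=None):
    """Return a list of human-readable findings for one module call."""
    if source.startswith(("./", "../")):
        return []  # local modules are exempt
    result = []
    if not source.startswith(ALLOWED_PREFIXES):
        result.append("source is not on the allow list")
    if source.startswith("git::") or "://" in source:
        ref = source.partition("?ref=")[2]  # "" if there is no ref
        if ref in BANNED_VCS_TAGS:
            result.append(f"tag {ref} is on the deny list")
    elif version is None:
        result.append("registry module without a version")
    elif version in BANNED_REGISTRY_VERSIONS:
        result.append(f"version {version} is on the deny list")
    return result

# Hypothetical module calls.
examples = [
    ("git::https://gitlab.ict.technology/modules//base/oci-vcn?ref=v1.2.8", None),
    ("git::https://github.com/someone/module?ref=v2.0.0", None),
    ("app.terraform.io/ict-technology/vcn/oci", "2.0.0"),
]
for source, version in examples:
    print(source, "->", findings(source, version) or "ok")
```

Keeping the two lists separate has a practical benefit: the allow list changes rarely, while the deny list grows with every advisory.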

Testing Framework: Automated guardrails in Terraform 1.6+

Sentinel policies are one thing - but sometimes you want to check project-specific rules right where the code lives. The native testing framework (terraform test) has been generally available for this purpose since Terraform 1.6. It allows you to write small, focused tests that harden your modules against misconfigurations. No external engine, no overhead - simply declarative within the project.

    # tests/guardrails.tftest.hcl

    variables {
      max_creates = 50
      max_changes = 25
    }

    run "no_destroys_in_plan" {
      command = plan

      assert {
        condition = length([
          for rc in run.plan.resource_changes : rc
          if contains(rc.change.actions, "delete")
        ]) == 0
        error_message = "Plan contains deletions. Please split the change or use an approval workflow."
      }
    }

    run "cap_creates" {
      command = plan

      # Counts pure creates, not replacements
      assert {
        condition = length([
          for rc in run.plan.resource_changes : rc
          if contains(rc.change.actions, "create") && !contains(rc.change.actions, "delete")
        ]) <= var.max_creates
        error_message = format(
          "Too many new resources (%d > %d) in one run – split into smaller batches.",
          length([
            for rc in run.plan.resource_changes : rc
            if contains(rc.change.actions, "create") && !contains(rc.change.actions, "delete")
          ]),
          var.max_creates
        )
      }
    }

    run "cap_changes" {
      command = plan

      assert {
        condition = length([
          for rc in run.plan.resource_changes : rc
          if contains(rc.change.actions, "update")
        ]) <= var.max_changes
        error_message = "Too many updates on existing resources - blast radius is too high."
      }
    }

    run "cap_replacements" {
      command = plan

      assert {
        condition = length([
          for rc in run.plan.resource_changes : rc
          if contains(rc.change.actions, "create") && contains(rc.change.actions, "delete")
        ]) == 0
        error_message = "Plan contains replacements (create+delete) - please review and minimize them."
      }
    }

This way, you kill two birds with one stone: your engineers can verify at project level that a plan stays within the agreed limits for deletions, creates, updates, and replacements, and your CI/CD pipeline gains an additional safety belt. If something blows up here, at least it happens early, before it hits production.

But a bit of explanation is in order.

Why it works is quite simple:

- Terraform executes a plan for every run. The testing framework provides the result as structured data under run.plan. This is not a plain text dump but an object that includes, among other things, resource_changes.

- run.plan.resource_changes is a list in which each entry corresponds to a planned resource operation. Each entry contains change.actions, a list of actions that Terraform plans for that resource. Possible contents include:

  - only "create" if a resource is newly created,

  - only "update" if an existing resource is modified,

  - "create" and "delete" together if Terraform replaces the resource. By default it deletes the resource first and then creates it again; with create_before_destroy the order is reversed. This is the classic replacement.

- The assertions are simple counting rules over this list:

  - no_destroys_in_plan filters all entries whose actions contain the string "delete". If the resulting set is not empty, the test fails. This prevents destructive deletions in the plan. Because replacements also contain "delete", they fail this test as well.

  - cap_creates counts only genuine new resources, that is, entries with "create" but without "delete". Replacements are therefore deliberately excluded from this count. The error message uses format(...) so that embedded quotation marks do not cause syntax problems and you can see both the actual and threshold values.

  - cap_changes limits the number of updates, i.e. modifications to existing resources.

  - cap_replacements explicitly captures risky replacements, the combination of "create" and "delete" on the same resource. These cases often pose the greatest risks, as they can temporarily cause downtime or side effects.

- The variables { ... } section at the beginning defines thresholds available in all runs. This allows you to adapt the policy per pipeline or environment without changing the test logic itself.

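- The test does not require any project-specific constructs. It relies solely on the standardized plan model with resource_changes and change.actions, which should make it portable. Before you depend on it, verify with your Terraform version that test assertions can actually read the plan under run.plan; the documented home of these fields is the JSON plan representation produced by terraform show -json. It is entirely possible that I have accidentally introduced mistakes again.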
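The counting rules themselves are independent of the test framework. As a hedged sketch, the following Python snippet applies them to the JSON plan representation, where resource_changes and change.actions are documented fields. The function names, the thresholds as keyword arguments, and the sample plan are assumptions for illustration only.

```python
import json

def classify(actions):
    """Map a change.actions list to replace, delete, create, update or other."""
    acts = set(actions)
    if {"create", "delete"} <= acts:
        return "replace"
    for name in ("delete", "create", "update"):
        if name in acts:
            return name
    return "other"  # e.g. ["no-op"] or ["read"]

def summarize(plan):
    counts = {"create": 0, "update": 0, "delete": 0, "replace": 0, "other": 0}
    for rc in plan.get("resource_changes", []):
        counts[classify(rc["change"]["actions"])] += 1
    return counts

def violations(counts, max_creates=50, max_changes=25):
    errors = []
    if counts["delete"] or counts["replace"]:
        errors.append("plan contains deletions or replacements")
    if counts["create"] > max_creates:
        errors.append(f"too many new resources ({counts['create']} > {max_creates})")
    if counts["update"] > max_changes:
        errors.append(f"too many updates ({counts['update']} > {max_changes})")
    return errors

# Hypothetical, heavily shortened plan document.
sample = json.loads('''
{"resource_changes": [
  {"address": "oci_core_vcn.this", "change": {"actions": ["create"]}},
  {"address": "oci_core_drg.this", "change": {"actions": ["update"]}},
  {"address": "oci_core_nat_gateway.this", "change": {"actions": ["delete", "create"]}}
]}
''')
counts = summarize(sample)
print(counts)
print(violations(counts))
```

In a pipeline, the input would come from terraform plan -out=plan.out followed by terraform show -json plan.out, and a non-empty violations list would fail the job.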

If you also want to test expected precondition failures, you can do so, but you will need named check blocks in your code. Example:

    # In modules or root
    check "api_limits_reasonable" {
      assert {
        condition     = var.instance_count <= 200
        error_message = "Instance batch too large."
      }
    }

    # and a test which deliberately violates the precondition
    run "expect_api_limit_breach" {
      command = plan

      variables {
        instance_count = 1000
      }

      expect_failures = [check.api_limits_reasonable]
    }

Without such named checks, a corresponding test would point to nothing. The testing framework is not suitable for module versioning or version constraints, because it inspects plan contents rather than module metadata. For that purpose, Sentinel policies or a CI script that analyzes module sources and ?ref= tags or version constraints are better suited.
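One possible starting point for such a CI script is the module manifest that terraform init writes to .terraform/modules/modules.json. The following Python sketch lists source and version per module call. Note the assumptions: the manifest is an internal file rather than a stable interface, the key names Modules, Key, Source and Version reflect what current Terraform versions write, and the function name and sample content are invented for this example.

```python
import json

def module_inventory(manifest_text):
    """Return (key, source, version) for every non-root entry in the manifest."""
    manifest = json.loads(manifest_text)
    rows = []
    for entry in manifest.get("Modules", []):
        if entry.get("Key", "") == "":
            continue  # the entry with an empty key is the root module itself
        rows.append((entry["Key"], entry.get("Source", ""), entry.get("Version")))
    return rows

# Hypothetical manifest content; normally read from .terraform/modules/modules.json.
sample = '''
{"Modules": [
  {"Key": "", "Source": "", "Dir": "."},
  {"Key": "vcn",
   "Source": "git::https://example.com/modules//base/oci-vcn?ref=v1.4.0",
   "Dir": ".terraform/modules/vcn"},
  {"Key": "drg",
   "Source": "registry.terraform.io/example/drg/oci",
   "Version": "2.1.0",
   "Dir": ".terraform/modules/drg"}
]}
'''
for key, source, version in module_inventory(sample):
    print(key, source, version or "-")
```

The resulting list can then be fed into whatever pinning or deny-list check your pipeline applies.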

After the horse has bolted

Let us assume your state is already corrupted and there are incorrect dependencies. Then it may be time for extraordinary measures. Here is an emergency solution for the truly tough ones, again provided only as a link to the Git repository: GitHub - ICTtechnology/module-state-recovery

The script relieves you of the tedious manual work and automatically tracks down the modules that cause trouble in the Terraform state. It creates a backup, executes a plan run, exports the plan as JSON, and inspects it to determine which modules are responsible for the issues. The affected resources are shown in a preview. If you wish, you can supply a mapping file so that state addresses are moved cleanly before the rest is tidied up. By default, nothing bad happens, because everything runs in dry-run mode. Only when you deliberately start it with CONFIRM=1 and I_UNDERSTAND=YES does the script actually intervene - but then properly and with a heavy axe. That makes it a helpful tool for training and testing, for understanding and resolving module conflicts. It is explicitly not intended for production use.
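The dry-run gate and the mapping file are the two ideas worth copying from this approach. The Python sketch below illustrates both under stated assumptions: the mapping format (one "old new" address pair per line) is invented for this example and is not necessarily what the linked script uses, and the sketch only builds terraform state mv command lines without running them.

```python
import os
import shlex

def parse_mapping(text):
    """Parse 'old_address new_address' lines; skip blank lines and # comments."""
    pairs = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        if len(fields) != 2:
            raise ValueError(f"expected two addresses, got: {line!r}")
        pairs.append((fields[0], fields[1]))
    return pairs

def is_armed(env):
    # Both switches must be set deliberately; anything else means dry-run.
    return env.get("CONFIRM") == "1" and env.get("I_UNDERSTAND") == "YES"

def state_mv_commands(pairs, env):
    """Build one `terraform state mv` argument list per address pair."""
    flag = [] if is_armed(env) else ["-dry-run"]
    return [["terraform", "state", "mv", *flag, old, new] for old, new in pairs]

# Hypothetical mapping file content.
mapping = """
# old address                   new address
module.drg.oci_core_drg.this    module.network.oci_core_drg.this
"""

for cmd in state_mv_commands(parse_mapping(mapping), os.environ):
    print(shlex.join(cmd))
```

Requiring two separate switches is deliberate: a single leftover environment variable from an earlier shell session is not enough to turn a preview into a real state change.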

Checklist for robust module dependencies

✅ Version strategy

[ ] Semantic Versioning for all base and service modules. Anyone who gets creative here will pay for it later.

[ ] Pinning strategy per module level (root, service, base). Whoever writes "latest" has already lost.

[ ] A compatibility matrix for module versions exists and is maintained.

[ ] A dependency lock mechanism or CI scripts for version checking are integrated.

✅ Governance

[ ] Module version tracking is integrated into CI/CD.

[ ] Sentinel policies for allowed module sources and version ranges are established.

[ ] Allow and deny lists for known faulty module versions are set up.

[ ] Breaking-change notifications (release notes, advisory mechanisms) are established.

[ ] The module inventory is regularly updated and reviewed.

✅ Monitoring

[ ] Audit logging for module updates and state changes is enabled.

[ ] Early warning systems for destructive changes (plan analysis, exit codes) are implemented. If these do not exist, you will only see the explosion in production.

[ ] Alerting for unexpected module changes or dependency drift is in place and active.
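For the exit-code part of the early warning item above, terraform plan -detailed-exitcode is a convenient hook: it returns 0 for an empty diff, 1 for an error, and 2 when changes are present. A minimal Python sketch of how a pipeline step could translate that, with the function names and status texts chosen freely for this example:

```python
import subprocess

# Exit codes of `terraform plan -detailed-exitcode`.
EXIT_MEANING = {
    0: "no changes",
    1: "error",
    2: "changes present",
}

def interpret(code):
    return EXIT_MEANING.get(code, f"unexpected exit code {code}")

def plan_status(workdir="."):
    """Run terraform plan in workdir and translate its exit code."""
    result = subprocess.run(
        ["terraform", "plan", "-detailed-exitcode", "-input=false", "-no-color"],
        cwd=workdir,
        capture_output=True,
        text=True,
    )
    return interpret(result.returncode)
```

Calling plan_status() in a scheduled job against an environment that should be stable turns "changes present" into a cheap drift alarm.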

✅ Recovery

[ ] A procedure for state surgery and address migration is defined, documented, and communicated.

[ ] A rollback strategy for faulty module updates is in place.

[ ] Emergency response playbooks for critical module issues are created and communicated.

[ ] Team training for troubleshooting module dependencies is established.

Yes, you will want all of this once your automated infrastructure reaches a certain size - even against the resistance of some engineers who consider themselves seniors.

Because nothing is worse than helpless faces when everything is on fire.