"Versioning? Oh, I just always use the latest version - what could possibly go wrong?"

This very attitude is one of the reasons why some engineers get woken up by a phone call at 3 a.m. And why, the next morning, people stare awkwardly at their shoes during the daily stand-up.

Before it happens to you too, let’s briefly talk about this in the final part of our “Terraform @ Scale” series.

After discussing dependencies, blast radius, and testing in the previous parts of this series, today we turn to a topic that is often underestimated: the professional versioning of Terraform modules. Because a module without clean versioning is like a car without its annual inspection - it may run fine for a while, but sooner or later, you’re going to have a real problem.

Why module versioning is essential

Imagine this: a central network module is used by 42 different projects. The original developer has left the company, their documentation consisted of “see code”, and now the module urgently needs to be updated. Without clear versioning, you have no idea:

- Which projects are using which version of the module

- Whether an update introduces breaking changes for existing deployments

- How to perform a rollback if something goes wrong

- When the module was last changed and why

The result? The famous 3 a.m. escalation, because no one knows which dependencies exist where. Then begins the great guessing game, while the infrastructure burns.

Professional module versioning is not a “nice-to-have” for perfectionists. It is the foundation that keeps your Infrastructure-as-Code maintainable, even with 100+ projects - without needing an archaeology degree to find out what was done when and why.

Semantic Versioning - the lingua franca of versioning

Semantic Versioning (SemVer) is the de facto standard for software versioning, and thus the only reasonable choice for Terraform modules. The schema is simple: MAJOR.MINOR.PATCH (e.g. v1.3.7).


The three components in detail

MAJOR version (1.x.x) - The big hammer:

Increased when introducing breaking changes - changes that will necessarily break existing code. Examples:

- A required variable is renamed or removed

- An output is deleted that other modules depend on

- The resource structure changes such that a terraform state mv is required

- Provider requirements change incompatibly (e.g. from AWS Provider 4.x to 5.x)

Rule of thumb: If users of the module MUST adjust their Terraform configuration, it is a major update.

MINOR version (x.1.x) - The friendly enhancement:

Increased when new backward-compatible features are added. Examples:

- New optional variables with sensible defaults

- Additional outputs that no one has missed so far

- New optional submodules or resources

- Performance improvements without functional changes

Rule of thumb: Existing code continues to work, but new options are available. Updating is optional but recommended.

PATCH version (x.x.1) - The bug fix:

Increased when fixing bugs that do not change functionality. Examples:

- Fixing typos in the descriptions of outputs or variables

- Fixes for race conditions or timing issues

- Documentation updates

- Correction of obviously wrong default values

Rule of thumb: Pure bug fixes. Updates should always be safe.
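The three rules of thumb can be condensed into a small helper. The following is a minimal Python sketch, assuming plain MAJOR.MINOR.PATCH versions with an optional leading v; the function name next_version and the change labels are made up for illustration and not part of any Terraform tooling.

```python
def next_version(current: str, change: str) -> str:
    """Return the next SemVer string for a 'major', 'minor' or 'patch' change."""
    major, minor, patch = (int(part) for part in current.lstrip("v").split("."))
    if change == "major":      # breaking change: minor and patch are reset
        return f"v{major + 1}.0.0"
    if change == "minor":      # backward-compatible feature: patch is reset
        return f"v{major}.{minor + 1}.0"
    if change == "patch":      # pure bug fix
        return f"v{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change!r}")


print(next_version("v1.3.7", "major"))  # v2.0.0
print(next_version("v1.3.7", "minor"))  # v1.4.0
print(next_version("v1.3.7", "patch"))  # v1.3.8
```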

Pre-release and build metadata

SemVer allows additional labels for beta versions or build information:

v1.2.3-beta.1    # Beta version
v1.2.3-rc.2      # Release candidate
v1.2.3+build.42  # Build metadata (ignored during version comparisons)
v2.0.0-alpha.1   # Alpha version of a major update

These pre-release versions are intended for development and testing. They have no place in production environments - although some teams think otherwise and suffer accordingly.
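How these labels sort relative to each other follows the SemVer precedence rules. Here is a small Python sketch of those rules, written for illustration only; the names parse and compare are assumptions, and the parser is deliberately simpler than a full SemVer validator.

```python
import functools
import re

SEMVER = re.compile(r"^v?(\d+)\.(\d+)\.(\d+)(?:-([0-9A-Za-z.-]+))?(?:\+[0-9A-Za-z.-]+)?$")


def parse(version: str):
    """Split a version into (core tuple, pre-release identifiers); build metadata is dropped."""
    match = SEMVER.match(version)
    if match is None:
        raise ValueError(f"not a SemVer string: {version!r}")
    core = tuple(int(match.group(i)) for i in (1, 2, 3))
    pre = match.group(4).split(".") if match.group(4) else []
    return core, pre


def compare(a: str, b: str) -> int:
    """Return -1, 0 or 1 following the SemVer precedence rules."""
    (core_a, pre_a), (core_b, pre_b) = parse(a), parse(b)
    if core_a != core_b:
        return -1 if core_a < core_b else 1
    if not pre_a or not pre_b:            # a release outranks any of its pre-releases
        return (not pre_a) - (not pre_b)
    for x, y in zip(pre_a, pre_b):
        if x == y:
            continue
        if x.isdigit() and y.isdigit():   # numeric identifiers compare as numbers
            return -1 if int(x) < int(y) else 1
        if x.isdigit() != y.isdigit():    # numeric identifiers rank below alphanumeric ones
            return -1 if x.isdigit() else 1
        return -1 if x < y else 1         # alphanumeric identifiers compare in ASCII order
    return (len(pre_a) > len(pre_b)) - (len(pre_a) < len(pre_b))


versions = ["v1.2.3", "v1.2.3-rc.2", "v2.0.0-alpha.1", "v1.2.3-beta.1", "v1.2.3+build.42"]
print(sorted(versions, key=functools.cmp_to_key(compare)))
# ['v1.2.3-beta.1', 'v1.2.3-rc.2', 'v1.2.3', 'v1.2.3+build.42', 'v2.0.0-alpha.1']
```

Note that v1.2.3 and v1.2.3+build.42 compare as equal, which is exactly why build metadata is unsuitable for distinguishing releases.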

Module upgrade scenarios and their pitfalls

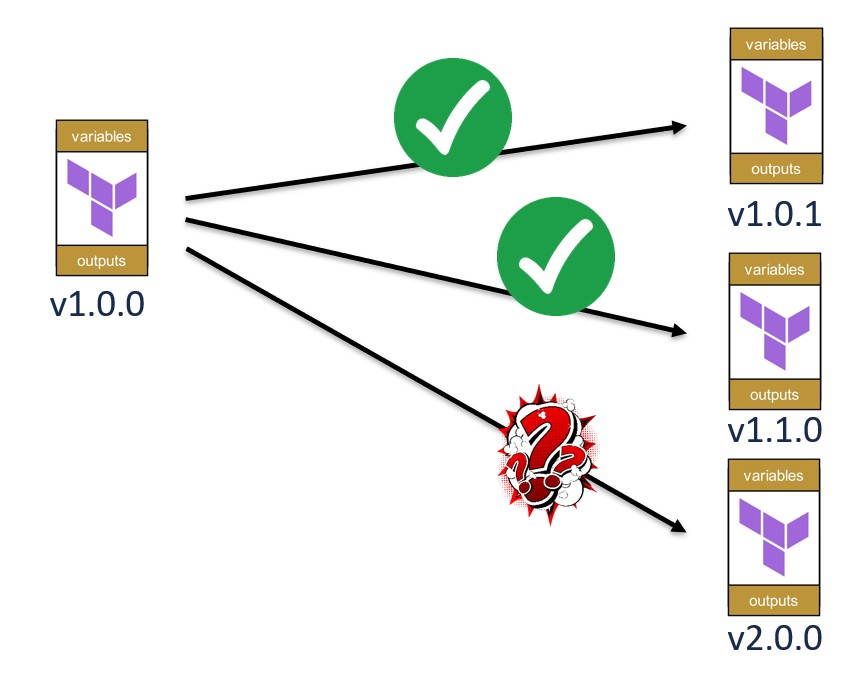

The theory is nice. Practice, as usual, is more complicated. Let’s look at what happens when you want to upgrade a module from version v1.0.0 to various newer versions.

The gentle patch update (v1.0.0 → v1.0.1)

This should be the easiest exercise of all. A bug fix, no breaking changes, nothing dramatic. In theory, you simply run terraform init -upgrade and you’re done.

In practice, you may stumble upon:

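- Provider lock files: Your .terraform.lock.hcl pins provider versions and hashes, not module versions. If the bug fix changes the module’s provider requirements, the locked provider version may no longer satisfy them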

- State drift: The bug fix corrects an error that already manifested in the state - now Terraform wants to modify resources

- Downstream dependencies: Other configurations that consume your module pin it separately and have their own lock files, so each of them has to be upgraded and tested on its own

Best practice: Even for patch updates, always perform a plan run in a test environment. Not out of paranoia, but out of experience.

The friendly minor update (v1.0.0 → v1.1.0)

New features, backward compatible. Sounds good. But “backward compatible” does not mean “without side effects”. New optional variables have defaults, and those defaults will be applied whether you want them or not.

Example scenario:

# v1.0.0 - Original Version

resource "oci_core_vcn" "this" {

compartment_id = var.compartment_id

cidr_block = var.cidr_block

display_name = var.display_name

}

# v1.1.0 - New optional Features

resource "oci_core_vcn" "this" {

compartment_id = var.compartment_id

cidr_block = var.cidr_block

display_name = var.display_name

# NEW: Optional DNS Configuration

dns_label = var.dns_label # Default: null

# NEW: Optional IPv6 Support

is_ipv6enabled = var.enable_ipv6 # Default: false

}

What happens during the update? Terraform re-evaluates the resource with the new arguments. An argument set to “null” is treated as if it had been omitted, so it normally produces no diff. An explicit “false”, however, is a real value that the provider compares against what is stored in the state. Depending on the provider and resource, this can result in an in-place update or even a replacement.

Provider-specific detail: With some providers, a new “false” default can still trigger a plan change if the provider treats the “unset” state differently from explicitly “false”. This is especially relevant for boolean attributes added later to existing resources.

Best practice: Test minor updates extensively in DEV environments. Review the plan output for unexpected changes. And yes, that means actually READING the plan, not just skimming it.
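Reading the plan gets easier if a script first narrows it down to the attributes that actually change. The sketch below assumes the plan was exported with terraform show -json tfplan and relies only on the resource_changes, address, actions, before and after fields of that JSON; the function name and the sample data are made up for illustration, and values that are only known after apply are not covered.

```python
import json


def changed_attributes(plan: dict) -> dict:
    """Map each modified resource address to (actions, sorted changed top-level attributes)."""
    report = {}
    for rc in plan.get("resource_changes", []):
        change = rc["change"]
        actions = change["actions"]
        if actions in (["no-op"], ["read"]):
            continue                      # nothing would be modified
        before = change.get("before") or {}
        after = change.get("after") or {}
        keys = sorted(k for k in before.keys() | after.keys() if before.get(k) != after.get(k))
        report[rc["address"]] = (actions, keys)
    return report


# Shortened, hand-written sample; a real plan JSON contains many more fields.
sample = json.loads("""
{"resource_changes": [
  {"address": "module.vcn.oci_core_vcn.this",
   "change": {"actions": ["update"],
              "before": {"display_name": "prod", "is_ipv6enabled": null},
              "after":  {"display_name": "prod", "is_ipv6enabled": false}}},
  {"address": "module.vcn.oci_core_subnet.a",
   "change": {"actions": ["no-op"],
              "before": {"display_name": "a"},
              "after":  {"display_name": "a"}}}
]}
""")

for address, (actions, keys) in changed_attributes(sample).items():
    print(address, actions, keys)
# module.vcn.oci_core_vcn.this ['update'] ['is_ipv6enabled']
```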

The dreaded major update (v1.5.0 → v2.0.0)

Now it gets serious. Breaking changes mean your existing code WILL break. The only question is where and how badly.

Typical scenarios for major updates:

- Variable renamed: subnet_ids is now subnet_id_list

- Output structure changed: What used to be a list is now a map

- Resource addresses have shifted: State migration required

- Provider requirements increased: OCI Provider 5.x → 6.x

The symbol with the red question mark in the graphic above is no coincidence. With major updates, it may happen that a direct upgrade from v1.5.0 to v2.0.0 simply doesn’t work. The reason: too many breaking changes at once.

Best practice: Read the release notes. All of them. Completely. Create a migration checklist. Test in isolated environments. Plan rollback strategies. And expect the unexpected.

Version Pinning - The art of controlled chaos

Now it gets practical. How do you pin modules to specific versions? Terraform provides several mechanisms, which vary depending on the module’s source.

Git-based modules with the ref parameter

For modules in Git repositories, the ?ref= parameter is the standard approach:

# Via Git-Tag (minimum for production environments)

module "vpc" {

source = "git::https://github.com/your-org/terraform-modules.git//vpc?ref=v1.2.3"

compartment_id = var.compartment_id

cidr_block = "10.0.0.0/16"

}

# Via Branch (only for development!)

module "vpc_dev" {

source = "git::https://github.com/your-org/terraform-modules.git//vpc?ref=develop"

compartment_id = var.dev_compartment_id

cidr_block = "172.16.0.0/16"

}

# Via Commit Hash (for maximum security, recommended; shortened here for readability, use the full 40-character hash)

module "vpc_immutable" {

source = "git::https://github.com/your-org/terraform-modules.git//vpc?ref=a1b2c3d4"

compartment_id = var.compartment_id

cidr_block = "192.168.0.0/16"

}

Important: The ?ref= comes AFTER the path to the module (//vpc), not before it. This mistake is surprisingly common and leads to cryptic error messages.

Note on commit hashes: When using commit hashes, always use the full hash. Short 7-character hashes may not be resolvable depending on your Git server configuration and can cause errors during terraform init.
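These rules are easy to check mechanically. The following Python sketch classifies the ?ref= part of a Git module source; the function name and the category labels are assumptions chosen for this example, and the tag check only recognizes SemVer-style tags such as v1.2.3.

```python
import re
from urllib.parse import parse_qs, urlsplit


def classify_git_source(source: str) -> str:
    """Classify the ?ref= of a Git module source.

    Returns 'commit', 'tag', 'other', 'misplaced' or 'unpinned'.
    """
    url = source[len("git::"):] if source.startswith("git::") else source
    ref = parse_qs(urlsplit(url).query).get("ref", [""])[0]
    if not ref:
        return "unpinned"
    if "//" in ref:
        return "misplaced"      # ?ref= was written before the //subdirectory
    if re.fullmatch(r"[0-9a-f]{40}", ref):
        return "commit"         # full commit hash
    if re.fullmatch(r"v?\d+\.\d+\.\d+", ref):
        return "tag"            # looks like a SemVer tag
    return "other"              # branch name, short hash or non-SemVer tag


base = "git::https://github.com/your-org/terraform-modules.git"
print(classify_git_source(base + "//vpc?ref=v1.2.3"))    # tag
print(classify_git_source(base + "//vpc?ref=develop"))   # other
print(classify_git_source(base + "//vpc?ref=a1b2c3d4"))  # other
print(classify_git_source(base + "?ref=v1.2.3//vpc"))    # misplaced
print(classify_git_source(base + "//vpc"))               # unpinned
```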

Terraform Registry with version constraints

For modules from public or private Terraform registries, use the version parameter:

# Exact Version (maximum pinning)

module "vpc" {

source = "oracle-terraform-modules/vcn/oci"

version = "3.5.4"

compartment_id = var.compartment_id

vcn_cidr = "10.0.0.0/16"

}

# Pessimistic Constraint Operator (recommended)

module "vpc_safe" {

source = "oracle-terraform-modules/vcn/oci"

version = "~> 3.5.0" # Allows 3.5.x, but not 3.6.0

compartment_id = var.compartment_id

vcn_cidr = "10.0.0.0/16"

}

# Range Constraints (allows more flexibility)

module "vpc_range" {

source = "oracle-terraform-modules/vcn/oci"

version = ">= 3.5.0, < 4.0.0" # All 3.x Versions, starting with 3.5.0

compartment_id = var.compartment_id

vcn_cidr = "10.0.0.0/16"

}

Version constraint operators - the fine print

Terraform supports various operators for version constraints:

- = or none: Exact version (e.g. = 1.2.3 or 1.2.3)

- !=: All versions except this one (e.g. != 1.2.3)

- >, >=, <, <=: Comparison operators (e.g. >= 1.2.0)

- ~>: Pessimistic constraint - allows only increments of the rightmost specified version component (e.g. ~> 1.2.0 allows >= 1.2.0 and < 1.3.0)

The ~> operator deserves special attention. It allows increments only for the rightmost specified component:

~> 1.2    # Allows >= 1.2.0 and < 2.0.0 (all minor and patch versions in major 1)
~> 1.2.0  # Allows >= 1.2.0 and < 1.3.0 (all patches in minor 1.2)
~> 1.2.3  # Allows >= 1.2.3 and < 1.3.0 (all patches starting with 1.2.3 in minor 1.2)

For production environments, we recommend: Exact versions or ~> with patch specification (e.g. ~> 3.5.0), to allow only patch releases within a minor version. If you also want to allow automatic minor upgrades, use ~> 3.5 - but that permits all versions up to < 4.0.0. Anything else will sooner or later lead to unexpected surprises.
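To make the behavior of ~> tangible, here is a short Python sketch that translates a pessimistic constraint into explicit bounds. It is an illustration of the rule described above, not Terraform’s own implementation; the function names are assumptions, and pre-release versions are not handled.

```python
def pessimistic_bounds(constraint: str):
    """Translate '~> X.Y' or '~> X.Y.Z' into (inclusive lower, exclusive upper) tuples."""
    parts = [int(p) for p in constraint.replace("~>", "", 1).strip().split(".")]
    if len(parts) < 2:
        raise ValueError("this sketch expects at least MAJOR.MINOR after '~>'")
    lower = tuple(parts + [0] * (3 - len(parts)))
    upper_parts = parts[:-1]          # drop the rightmost component ...
    upper_parts[-1] += 1              # ... and increment the one before it
    upper = tuple(upper_parts + [0] * (3 - len(upper_parts)))
    return lower, upper


def satisfies(version: str, constraint: str) -> bool:
    """Check a plain MAJOR.MINOR.PATCH version against a '~>' constraint."""
    lower, upper = pessimistic_bounds(constraint)
    candidate = tuple(int(p) for p in version.split("."))
    return lower <= candidate < upper


print(pessimistic_bounds("~> 1.2"))      # ((1, 2, 0), (2, 0, 0))
print(pessimistic_bounds("~> 1.2.3"))    # ((1, 2, 3), (1, 3, 0))
print(satisfies("3.5.9", "~> 3.5.0"))    # True
print(satisfies("3.6.0", "~> 3.5.0"))    # False
print(satisfies("3.9.0", "~> 3.5"))      # True
```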

Version pinning in large environments - the hard reality

In small setups, you can manually pin each module to a specific version. But that results in micromanagement and does not scale.

With 5 projects, it is still manageable.

With 50 projects, it becomes tedious.

With 500 projects, it becomes impossible.

The Git URL pinning minimum

In Terraform Open Source, for Git-based modules you are limited to using the ?ref= parameter. This is the absolute minimal solution. It works, but you have to manually:

- Add a ?ref= to every module call

- Find and update all affected locations in the code when performing updates

- Hope that no one forgets to add the ?ref=

For serious environments, this is not sustainable. It’s like hammering screws into the wall - technically possible, occasionally tolerable in rare exceptions, but generally a very bad idea. Beyond a certain size of your IaC codebase, you need more professional solutions.
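Until such a solution is in place, a small inventory script at least takes the hoping out of the process. The Python sketch below walks a directory tree and reports every module call together with its source, version and whether it is pinned. It is an assumption-laden illustration: it uses regular expressions instead of a real HCL parser, so it expects conventionally formatted files where the closing brace of a module block starts a line, and the function name is made up.

```python
import re
from pathlib import Path

MODULE_BLOCK = re.compile(r'^module\s+"([^"]+)"\s*\{(.*?)^\}', re.MULTILINE | re.DOTALL)
ATTRIBUTE = r'^\s*{name}\s*=\s*"([^"]*)"'


def module_inventory(root: str):
    """Yield (file, module name, source, version or None, pinned?) for each module call under root."""
    for tf_file in sorted(Path(root).rglob("*.tf")):
        if ".terraform" in tf_file.parts:
            continue                  # skip module copies downloaded by terraform init
        text = tf_file.read_text(encoding="utf-8")
        for name, body in MODULE_BLOCK.findall(text):
            source = re.search(ATTRIBUTE.format(name="source"), body, re.MULTILINE)
            version = re.search(ATTRIBUTE.format(name="version"), body, re.MULTILINE)
            src = source.group(1) if source else ""
            ver = version.group(1) if version else None
            local = src.startswith(("./", "../"))
            # Local modules are versioned with the repository itself.
            pinned = local or "?ref=" in src or ver is not None
            yield str(tf_file), name, src, ver, pinned
```

Calling module_inventory(".") from the root of a repository and filtering for rows where the last field is False lists every module call that still needs a ?ref= or a version.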

The registry solution with version constraints

Better, but still not perfect: a private Terraform Registry with version constraints. You can control which versions are allowed:

# In versions.tf

terraform {

required_version = ">= 1.10.0"

required_providers {

oci = {

source = "oracle/oci"

version = "~> 6.0"

}

}

}

# In main.tf

module "vcn" {

source = "your-company.com/modules/vcn/oci" # private registry format: <HOSTNAME>/<NAMESPACE>/<NAME>/<PROVIDER>

version = "~> 2.1.0" # Allows Patches, but not minor Upgrades

compartment_id = var.compartment_id

cidr_block = "10.0.0.0/16"

}

The problem: you still have to go through all repositories and update the version constraints whenever you want to perform a breaking change update. With 100+ repositories, that becomes a full-time job.

Version constraints for modules - the enterprise approach

In Terraform Enterprise or Terraform Cloud, you can define version constraints for modules on the workspace level or even globally. This is the professional solution for large environments:

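# Sentinel policy for module versioning
# Sketch: relies on the standard "version" import; verify the calls against your Sentinel runtime.
import "tfconfig/v2" as tfconfig
import "version"

# Define allowed versions per module
allowed_module_versions = {
  "oracle-terraform-modules/vcn/oci": {
    "min_version": "3.5.0",
    "max_version": "3.9.9",
  },
  "your-company.com/modules/compute/oci": {
    "min_version": "2.0.0",
    "max_version": "2.9.9",
  },
}

# Check a single module call. tfconfig/v2 exposes the constraint as written in the
# configuration (version_constraint), so this sketch only accepts exact pins such as "3.5.4".
is_allowed = func(mc) {
  # Only for registry sources which we want to manage explicitly
  if mc.source not in keys(allowed_module_versions) {
    return true
  }
  if mc.version_constraint not matches "^\\d+\\.\\d+\\.\\d+$" {
    return false
  }
  limits = allowed_module_versions[mc.source]
  c = version.new_constraint(">= " + limits["min_version"] + ", <= " + limits["max_version"])
  return c.check(mc.version_constraint)
}

# Verify all module calls
mandatory_version_constraint = rule {
  all tfconfig.module_calls as _, mc {
    is_allowed(mc)
  }
}

main = rule {
  mandatory_version_constraint
}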

With Sentinel, you can centrally control which module versions are allowed in which workspaces. An update to a new major release is then rolled out through a Sentinel policy change, not through modifications in each individual repository.

This is enterprise-grade version management. It costs money and requires setup effort, but it truly scales to hundreds of projects.

Testing module upgrades with the Terraform Testing Framework

You have updated a module. Now you need to verify that everything still works. Manual testing is for amateurs. Professionals automate.

The Terraform Testing Framework (available since Terraform 1.6 and significantly improved in 1.10+) provides exactly the right tools for this purpose.

Additional advantage: The Testing Framework can also act as a guardrail against excessively large batches - through tests that limit the number of planned resource changes. This indirectly mitigates API limit issues, which we have discussed in detail in earlier parts of this series.

Here is a practical example for module upgrade tests:

Test scenario: VCN module upgrade from v2.5.0 to v3.0.0

# tests/upgrade_v2_to_v3.tftest.hcl

variables {

compartment_id = "ocid1.compartment.oc1..example"

vcn_cidr = "10.0.0.0/16"

vcn_name = "test-upgrade-vcn"

}

# Test 1: Existing v2.5.0 functionality

run "test_v2_baseline" {

command = plan

module {

source = "oracle-terraform-modules/vcn/oci"

version = "2.5.0"

}

assert {

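# Outputs are referenced as output.<name>, without .value. A computed ID is unknown at plan time; use command = apply if this assertion has to be evaluated.

condition = length(output.vcn_id) > 0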

error_message = "VCN ID should be generated in v2.5.0"

}

assert {

condition = output.vcn_cidr_block == var.vcn_cidr

error_message = "CIDR block mismatch in v2.5.0"

}

}

# Test 2: v3.0.0 breaking changes

run "test_v3_migration" {

command = plan

module {

source = "oracle-terraform-modules/vcn/oci"

version = "3.0.0"

}

# v3.0.0 renamed 'vcn_name' to 'display_name'

variables {

display_name = var.vcn_name # New parameter name

}

assert {

# As above: no .value suffix, and a computed ID can only be checked with command = apply

condition = length(output.vcn_id) > 0

error_message = "VCN ID should still be generated in v3.0.0"

}

assert {

condition = output.vcn_display_name == var.vcn_name

error_message = "Display name not correctly migrated to v3.0.0"

}

}

# Test 3: Backwards Compatibility Check

run "test_output_compatibility" {

command = plan

module {

source = "oracle-terraform-modules/vcn/oci"

version = "3.0.0"

}

variables {

display_name = var.vcn_name

}

# Check if critical outputs still exist

assert {

condition = (

can(output.vcn_id) &&

can(output.vcn_cidr_block) &&

can(output.default_route_table_id)

)

error_message = "Critical outputs missing in v3.0.0 - breaking downstream dependencies!"

}

}

These tests run with terraform test and fail if an upgrade introduces breaking changes that are undocumented or unhandled.

Integration with CI/CD

Tests are only as good as their automation. Here is an example for GitLab CI/CD:

# .gitlab-ci.yml
stages:
  - test
  - plan
  - apply

terraform_test:
  stage: test
  image:
    name: hashicorp/terraform:1.10
    entrypoint: [""]  # reset the image's terraform entrypoint so GitLab can run the script
  script:
    - terraform init
    - terraform test -verbose
  only:
    - merge_requests
    - main

terraform_plan:
  stage: plan
  image:
    name: hashicorp/terraform:1.10
    entrypoint: [""]
  script:
    - terraform init
    - terraform plan -out=tfplan
  dependencies:
    - terraform_test
  only:
    - main
  artifacts:
    paths:
      - tfplan

terraform_apply:
  stage: apply
  image:
    name: hashicorp/terraform:1.10
    entrypoint: [""]
  script:
    - terraform init
    - terraform apply tfplan
  dependencies:
    - terraform_plan
  only:
    - main
  when: manual

No successful tests, no plan. No successful plan, no apply. That is the kind of pipeline you want.

Additional tip: In addition to the tests, you should implement automated plan scans for destructive changes (delete/destroy), as covered in previous parts of this series. These early-warning systems complement the testing guardrails and help catch potential blast radius issues before they reach production.
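Such a plan scan does not need much code. The following Python sketch assumes the plan was exported with terraform show -json tfplan > plan.json and looks only at the actions list of each entry in resource_changes; the script and function names are hypothetical.

```python
import json
import sys


def destructive_changes(plan: dict) -> list:
    """Return the addresses of all resources the plan would delete or replace."""
    return [
        rc["address"]
        for rc in plan.get("resource_changes", [])
        # A replacement is reported as a delete/create pair, so it is caught here as well.
        if "delete" in rc["change"]["actions"]
    ]


def main(path: str) -> int:
    with open(path, encoding="utf-8") as handle:
        hits = destructive_changes(json.load(handle))
    for address in hits:
        print(f"DESTRUCTIVE: {address}")
    return 1 if hits else 0   # a non-zero exit code fails the pipeline job


if __name__ == "__main__" and len(sys.argv) == 2:
    sys.exit(main(sys.argv[1]))
```

Saved as, say, scan_plan.py, it could run as an additional script step after terraform plan; whether a hit should block the pipeline or only require a manual approval is a policy decision for your team.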

Sentinel policies for version enforcement

Tests are good. Policies are better. Because tests can be bypassed if someone really wants to (or is too lazy). Sentinel policies in Terraform Enterprise that are enforced as hard-mandatory cannot be bypassed - unless you are an administrator who changes the policy itself, in which case that is a completely different problem.

Policy: Enforce module versioning

import "tfconfig/v2" as tfconfig
import "strings"

# Helper function: is the source a local path (./ or ../)?
is_local = func(src) {
  return strings.has_prefix(src, "./") or strings.has_prefix(src, "../")
}

# Helper function: is the source a VCS (git)?
is_vcs = func(src) {
  return strings.has_prefix(src, "git::") or src matches "://"
}

# Helper function: Extract ref parameter from URL ("" if there is none)
ref_of = func(src) {
  parts = strings.split(src, "?ref=")
  if length(parts) < 2 {
    return ""
  }
  return parts[1]
}

# Helper function: is a single module call properly versioned?
is_versioned = func(mc) {
  # Local modules are exempt
  if is_local(mc.source) {
    return true
  }
  # For VCS sources: ?ref= must exist
  if is_vcs(mc.source) {
    return length(ref_of(mc.source)) > 0
  }
  # For registry sources: version must be set (exposed as version_constraint in tfconfig/v2)
  return mc.version_constraint is not null and mc.version_constraint is not ""
}

# Policy: All non-local modules MUST be versioned
mandatory_versioning = rule {
  all tfconfig.module_calls as _, mc {
    is_versioned(mc)
  }
}

# Main policy
main = rule {
  mandatory_versioning
}

This policy fails any run in which a module is not properly versioned. Sentinel evaluates it after the plan and before the apply, so nothing gets applied. No exceptions. No mercy.

Policy: Block known faulty versions

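import "tfconfig/v2" as tfconfig

# Deny-list for modules with known bugs
forbidden_module_versions = {
  "oracle-terraform-modules/vcn/oci": ["3.2.0", "3.2.1"], # Bug in DHCP-Options
  "your-company.com/modules/compute/oci": ["1.5.0"], # Security Issue
}

# Helper function: does a module call pin a forbidden version?
# Note: this compares the constraint string as written, so it catches exact pins
# such as version = "3.2.0"; a range like "~> 3.2.0" is not resolved here.
is_forbidden = func(mc) {
  if mc.source not in keys(forbidden_module_versions) {
    return false
  }
  return mc.version_constraint in forbidden_module_versions[mc.source]
}

# Policy: Disallow known faulty versions
deny_forbidden_versions = rule {
  all tfconfig.module_calls as _, mc {
    not is_forbidden(mc)
  }
}

main = rule {
  deny_forbidden_versions
}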

Now you have a mechanism to globally block critical module versions. If version 3.2.0 of the VCN module has a severe bug, block it in the policy. Done. No one can use it anymore, not even “by accident”.

Practical workflows for module updates

Theory is good. Practice is better. Here are proven workflows for different update scenarios.

Workflow 1: Patch update (e.g. v2.3.1 → v2.3.2)

- Communication: Announce in team chat, even if it’s “just” a patch

- Test in DEV: Update in the development environment, run terraform init -upgrade, review the plan

- Automated tests: Run terraform test

- Staging deployment: Roll out to staging, monitor for 24 hours

- Production rollout: Roll out to production during business hours (not Friday at 16:00)

- Documentation: Record update in the CHANGELOG and Confluence/Wiki

Duration: 1–3 days, depending on the size of the environment

Note: The duration estimates are based on experience and can vary significantly depending on compliance requirements (SOX, ISO, GDPR) and change management processes.

Workflow 2: Minor update (e.g. v2.3.2 → v2.4.0)

- Release notes review: Thoroughly review all new features and changes

- Impact analysis: Which new defaults become active? Are there unexpected side effects?

- Create test plan: Documented test scenarios for new features

- DEV testing: Extensive testing in development (at least 1 week)

- Canary deployment: Roll out to a small production subset

- Monitoring phase: 1 week of monitoring the canary deployment

- Full rollout: Gradual rollout over several days/weeks

- Postmortem review: Document lessons learned

Duration: 2–4 weeks, depending on risk assessment

Workflow 3: Major update (e.g. v2.4.0 → v3.0.0)

- Breaking changes inventory: Create a complete list of all breaking changes

- Migration guide: Step-by-step instructions for each breaking change

- Dependency analysis: Identify which downstream modules are affected

- Set up test environment: Dedicated environment for migration testing

- Test state migration: Terraform state surgery if needed

- Rollback strategy: Documented plan for the worst case

- DEV migration: Complete migration in development with testing

- Staging migration: Migration in staging with 2 weeks of monitoring

- Go/No-Go meeting: Formal decision based on test results

- Production migration: Planned maintenance window, all hands on deck

- Post-migration monitoring: Intensive monitoring for 2 weeks

- Documentation update: Update all documentation to the new version

Duration: 1–3 months, depending on complexity and scale
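Which of the three workflows applies can be derived directly from the two version numbers. A minimal Python sketch, assuming plain MAJOR.MINOR.PATCH versions; the function name and the WORKFLOW mapping are made up for illustration, and the durations are simply the estimates from the workflows above.

```python
def upgrade_type(current: str, target: str) -> str:
    """Classify an upgrade between two MAJOR.MINOR.PATCH versions."""
    old = tuple(int(p) for p in current.lstrip("v").split("."))
    new = tuple(int(p) for p in target.lstrip("v").split("."))
    if new <= old:
        raise ValueError("target must be newer than current")
    if new[0] != old[0]:
        return "major"
    if new[1] != old[1]:
        return "minor"
    return "patch"


WORKFLOW = {
    "patch": "Workflow 1 (1-3 days)",
    "minor": "Workflow 2 (2-4 weeks)",
    "major": "Workflow 3 (1-3 months)",
}

print(WORKFLOW[upgrade_type("v2.3.1", "v2.3.2")])  # Workflow 1 (1-3 days)
print(WORKFLOW[upgrade_type("v2.3.2", "v2.4.0")])  # Workflow 2 (2-4 weeks)
print(WORKFLOW[upgrade_type("v2.4.0", "v3.0.0")])  # Workflow 3 (1-3 months)
```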

Checklist: professional module versioning

✅ Basics

[ ] Semantic Versioning is consistently applied for all modules

[ ] Git tags are created for every version (not only for major releases)

[ ] CHANGELOG.md is updated for every release

[ ] Release notes are clear and complete (not just “minor fixes”)

[ ] Breaking changes are explicitly marked and documented

✅ Version pinning

[ ] All module calls use explicit version pinning (no “latest”)

[ ] Git modules use ?ref= with specific tags, not branches

[ ] Registry modules use version constraints (preferably ~>)

[ ] Development/test environments may use looser pinning than production

[ ] Lock files (.terraform.lock.hcl) are versioned in Git

✅ Testing & validation

[ ] Terraform Testing Framework is implemented for all critical modules

[ ] Upgrade tests exist for major and minor updates

[ ] Tests run automatically in the CI/CD pipeline

[ ] Backwards compatibility is verified before every release

[ ] Regression tests for known bugs exist

✅ Governance

[ ] Sentinel policies enforce module versioning (Terraform Enterprise)

[ ] Deny list for faulty module versions is implemented

[ ] Version update process is documented and followed

[ ] Changes to modules go through a review process

[ ] Major updates require a formal Go/No-Go meeting

✅ Documentation

[ ] README.md explains the module’s versioning strategy

[ ] CHANGELOG.md is maintained and follows the Keep a Changelog format

[ ] Migration guides exist for all major updates

[ ] Known issues are documented

[ ] Deprecation notices are communicated at least 2 minor versions in advance

✅ Monitoring & incident response

[ ] Module versions in deployments are traceable (logs, tags)

[ ] Alerting for unexpected module updates is active

[ ] Rollback procedures are documented and tested

[ ] Incident response playbook for module issues exists

[ ] Postmortem process after failed updates is established
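Several of these items can be checked automatically. As one example, the Python sketch below verifies that every release tag has a matching entry in CHANGELOG.md. It assumes headings in the Keep a Changelog style (## [1.2.0] - date) and tags of the form v1.2.0; the function name, the sample changelog and the sample tags are made up for illustration.

```python
import re

HEADING = re.compile(r"^## \[(\d+\.\d+\.\d+)\]", re.MULTILINE)


def missing_changelog_entries(changelog: str, tags: list) -> list:
    """Return the tags (e.g. 'v1.2.0') that have no '## [x.y.z]' heading in the changelog."""
    documented = set(HEADING.findall(changelog))
    return [tag for tag in tags if tag.lstrip("v") not in documented]


# Made-up sample data for demonstration purposes.
sample_changelog = """\
# Changelog

## [Unreleased]

## [1.2.0] - 2025-01-15
### Added
- Optional dns_label variable

## [1.1.0] - 2024-11-02
### Fixed
- Wrong default for display_name
"""

print(missing_changelog_entries(sample_changelog, ["v1.1.0", "v1.2.0", "v1.2.1"]))
# ['v1.2.1']
```

In a pipeline, the list of tags would typically come from git tag, and a non-empty result would fail the release job.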

Final thoughts

Module versioning is not sexy. It’s not a topic that gets discussed at conferences. There are no fancy graphics or impressive demos. But it is the difference between an infrastructure that remains maintainable after three years and an unmanageable mess that nobody wants to touch.

If there’s one thing to take away from this article, it’s this: Invest in clean versioning now, or pay ten times the price later in incidents, rollbacks, and weekend callouts.

The 3 a.m. escalations may not disappear completely. But they will become much rarer. And that’s already something.

Because the only usable version is the one you can control.