The rapid adoption of cloud infrastructure has fundamentally transformed how enterprises build and manage their IT resources. As organizations increasingly embrace multi-cloud strategies and complex hybrid deployments, the challenges of maintaining security, compliance, and operational excellence have grown exponentially. At ICT.technology, we've observed that successful cloud adoption and datacenter operations require more than just technical expertise: they demand a systematic approach to infrastructure provisioning that addresses these challenges head-on. Some enterprises have already learned this lesson the hard way.

When Seven Servers Brought Down a Wall Street Giant

On July 31, 2012, Knight Capital Group was riding high. As one of Wall Street's largest trading firms, they controlled a 17.3% share of NYSE trading volume and a 16.9% share of NASDAQ trades, handling nearly 4 billion shares daily. Their reputation had just been burnished by a Wall Street Letter award for their Knight Direct algorithms and Hotspot FX trading platform. Life was good.

Then came the morning of August 1, 2012. What started as a routine software deployment across eight servers would spiral into one of the most expensive 45 minutes in financial history. The problem wasn't the new code itself – it was how it interacted with an old, dormant feature that had been left behind on some servers, a digital landmine waiting to be triggered.

Prior to August 1, Knight Capital had an old piece of code called "Power Peg" that was dormant. This code was no longer used. It was disabled via a flag setting, but the underlying code was still present and... well, pretty broken.

So a new deployment to the servers was scheduled for a maintenance window in the middle of the night, to avoid disruption during trading.

On July 31, 2012, they started deploying new trading software across 8 servers. However, the deployment was inconsistent:

- On 7 servers, they deployed the new code correctly.

- On 1 server, they forgot to deploy the new code.

- The new software update accidentally reactivated the old Power Peg flag.

As a result, seven servers ran the new code and one ran the old code. The server with the old code continued working with mock data, but created very real trading orders.

When the market opened, chaos ensued.

Knight Capital's trading systems went haywire, firing off erroneous trades at a dizzying pace. In just 45 minutes, their automated trading system unleashed more than 4 million unintended trades into the market.

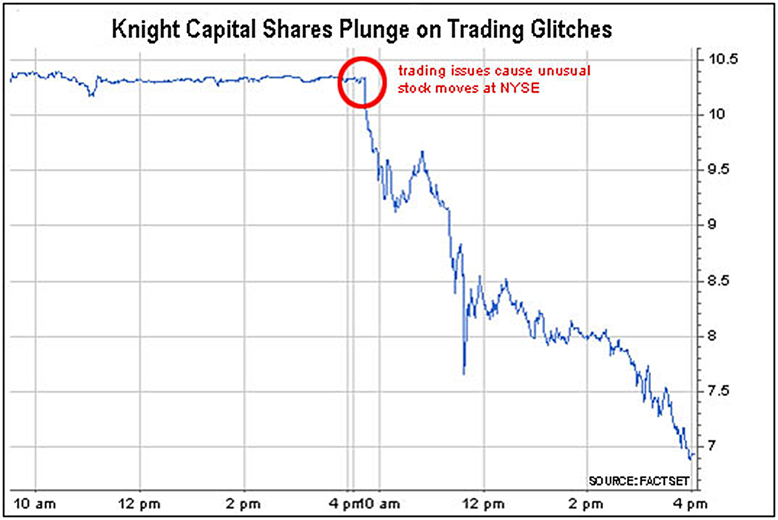

The result? A staggering $460 million loss, as reported in multiple news sources including Business Insider and Bloomberg. Knight Capital's stock price plunged 32.8% in a single day.

The aftermath was brutal. To avoid bankruptcy, the company needed an emergency $400 million capital injection. On October 15, 2013, the SEC investigation concluded with a $12 million fine for violating trading rules by failing to have adequate safeguards in place.

The irony? This catastrophe could have been prevented with proper infrastructure automation and deployment practices. Manual server configurations, inconsistent deployments, and the lack of proper testing environments created a perfect storm. Seven servers. That's all it took to bring down a company that handled nearly 4 billion shares per day.

This incident serves as a stark reminder of why modern infrastructure provisioning tools like Terraform exist. When a single mismatched configuration across a handful of servers can cost nearly half a billion dollars, the old ways of managing infrastructure through manual changes and "hope for the best" deployment strategies just don't cut it anymore.

The Knight Capital disaster wasn't just a software failure – it was a failure of infrastructure management. In today's world, where infrastructure can be defined as code, version controlled, and automatically deployed with consistent configurations across any number of servers, such a disaster would be much harder to achieve. Though I suppose if you're going to fail, failing so spectacularly that you make it into every DevOps presentation for the next one-and-a-half decades is one way to go about it.

Now, let's talk about how modern infrastructure provisioning can help prevent such disasters...

The Evolution of Infrastructure Provisioning

The Knight Capital incident serves as a powerful reminder of why infrastructure provisioning has evolved dramatically from its origins in manual server configuration. Today's enterprise environments demand sophisticated solutions that can handle the complexity of modern cloud architectures while ensuring security, compliance, and operational efficiency. Terraform has emerged as the de facto standard for infrastructure provisioning, offering a powerful declaration-based approach that transforms how we manage infrastructure.

Modern infrastructure provisioning must also accommodate the reality of hybrid and multi-cloud environments. Organizations often leverage different cloud providers in addition to on-premise datacenters for specific capabilities or to meet regional requirements. Terraform excels in these scenarios by providing a unified workflow for managing resources across providers. This capability becomes particularly valuable when organizations need to maintain consistency in their infrastructure approach while taking advantage of provider-specific features.

The integration capabilities of Terraform extend beyond just cloud infrastructure. Modern enterprises require their infrastructure tools to work seamlessly with existing systems for monitoring, logging, and security. Terraform's provider ecosystem enables organizations to manage these integrations as code, ensuring that new infrastructure is automatically configured with appropriate monitoring, logging, and security controls from the moment it is created.

The Benefit of Declarative Infrastructure-as-Code

The shift from manual configurations to automated infrastructure provisioning marks a significant evolution. However, not all automation approaches are created equal. Let’s take a closer look at the difference between imperative and declarative provisioning models.

The Knight Capital incident demonstrated the risks of imperative, step-by-step infrastructure management. Terraform's declarative approach offers a fundamentally different paradigm. Rather than specifying detailed sequences of commands, organizations describe their desired end state. This shift enables engineers to focus on what they want to achieve rather than the specific commands needed to get there.

The declarative model enables Terraform to handle complex dependency management automatically, ensuring resources are created in the correct order and maintaining relationships between different components. This systematic approach helps prevent the kind of inconsistent deployments that led to the Knight Capital disaster.

| Imperative (examples: Bash scripts, Ansible roles) | Declarative (examples: SQL, Terraform configurations) |
|---|---|
| Defines step-by-step instructions to achieve the desired outcome. | Defines the desired end state; the system determines the steps automatically. |
| Execution is sequential. Commands must follow a strict order, and you must manage dependencies yourself. | Execution is state-driven: Terraform computes dependencies and execution order. |
| Manual intervention often required for resource lifecycle management. | Automatic resource management (creation, updates, decommissioning). |
| Typically stateless and non-idempotent, meaning rerunning the process can cause unintended changes. | Stateful and idempotent, ensuring consistent results across multiple runs. |
| Focuses on process control: you specify how to configure resources. | Focuses on outcome: you specify what the final infrastructure must look like. |
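
To make the declarative column concrete, here is a minimal sketch of eight identical servers described once. It assumes the AWS provider is already configured; the resource names, the instance type, the port, and the `ami_id` variable are illustrative assumptions, not anything taken from Knight Capital's actual setup.

```hcl
# Sketch only: names, instance type, CIDR range, and port are assumptions.
variable "ami_id" {
  type        = string
  description = "Image that contains the release to run on every server"
}

resource "aws_security_group" "trading" {
  name        = "trading-servers"
  description = "Ingress rules for the trading servers"

  ingress {
    from_port   = 443
    to_port     = 443
    protocol    = "tcp"
    cidr_blocks = ["10.0.0.0/16"]
  }
}

# Eight servers from one definition. Terraform infers that the security
# group must exist first, because the instances reference its ID.
resource "aws_instance" "trading" {
  count                  = 8
  ami                    = var.ami_id
  instance_type          = "t3.large"
  vpc_security_group_ids = [aws_security_group.trading.id]

  tags = {
    Name = "trading-${count.index + 1}"
  }
}
```

Because all eight instances derive from the same `ami_id`, a plan that would leave one server on an older image shows up as a pending change instead of going unnoticed.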

Terraform Modules: Reusable Code Shared Across the Organization

Resource management and scaling capabilities represent another critical aspect of modern infrastructure provisioning. Terraform's module system enables us to create reusable components for our customers that encapsulate best practices, security controls during the planning phase and the actual runtime, and individual business policy requirements. These modules can be shared across teams, ensuring a consistent implementation of infrastructure patterns while allowing for customization where needed. This approach significantly reduces the time required to provision new environments while maintaining state-of-the-art standards for security and compliance.
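
As a rough illustration of module reuse, the sketch below calls the same module twice with different inputs. The module path `./modules/network`, its input names, and its `vpc_id` output are hypothetical; a real module defines its own interface.

```hcl
# Hypothetical module and inputs, shown only to illustrate reuse.
module "network_prod" {
  source = "./modules/network"

  name        = "billing-prod"
  cidr_block  = "10.20.0.0/16"
  environment = "prod"
}

# The same module, customized for a second environment.
module "network_dev" {
  source = "./modules/network"

  name        = "billing-dev"
  cidr_block  = "10.30.0.0/16"
  environment = "dev"
}

# Assumes the module declares an output named "vpc_id".
output "prod_vpc_id" {
  value = module.network_prod.vpc_id
}
```

Teams that share modules through a registry would typically also pin a module version, so that changes to the shared code roll out deliberately.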

Cloud-Agnostic Architecture

Consider a typical enterprise scenario where an application requires load balancers, virtual machines, networking components, and firewall rules across multiple cloud providers. With traditional approaches, provisioning this infrastructure would require in-depth knowledge of each provider's specific tools and interfaces. While Terraform provides a consistent syntax and workflow through HCL2 (HashiCorp Configuration Language 2.0), it is crucial to understand that the underlying provider-specific code remains fundamentally different. A load balancer configuration for AWS, for instance, requires entirely different resource definitions and attributes compared to Oracle Cloud Infrastructure (OCI), Azure, or other cloud providers.

While HashiCorp's marketing materials might suggest a more seamless multi-cloud experience, the reality is that each provider requires its own specific implementation details, expertise, and ongoing lifecycle management of the modules that interface with the provider APIs. Through careful abstraction and sophisticated module design, organizations can create well-designed interfaces that provide end users with a consistent experience for resource creation and management across different cloud providers.
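
The difference becomes visible when a load balancer is declared side by side for two providers. The following is a sketch, assuming both the `aws` and `azurerm` providers are configured elsewhere; all variable names and values are illustrative.

```hcl
# Illustrative inputs; in practice these come from other resources.
variable "aws_subnet_ids" { type = list(string) }
variable "azure_location" { type = string }
variable "azure_resource_group" { type = string }
variable "azure_public_ip_id" { type = string }

# AWS: an application load balancer attached to subnets.
resource "aws_lb" "app" {
  name               = "app-lb"
  internal           = false
  load_balancer_type = "application"
  subnets            = var.aws_subnet_ids
}

# Azure: a load balancer tied to a resource group, with an explicit
# frontend IP configuration block.
resource "azurerm_lb" "app" {
  name                = "app-lb"
  location            = var.azure_location
  resource_group_name = var.azure_resource_group
  sku                 = "Standard"

  frontend_ip_configuration {
    name                 = "public-frontend"
    public_ip_address_id = var.azure_public_ip_id
  }
}
```

The syntax is the same, but the resource types, required arguments, and nested blocks share almost nothing, which is why the abstraction has to be built deliberately.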

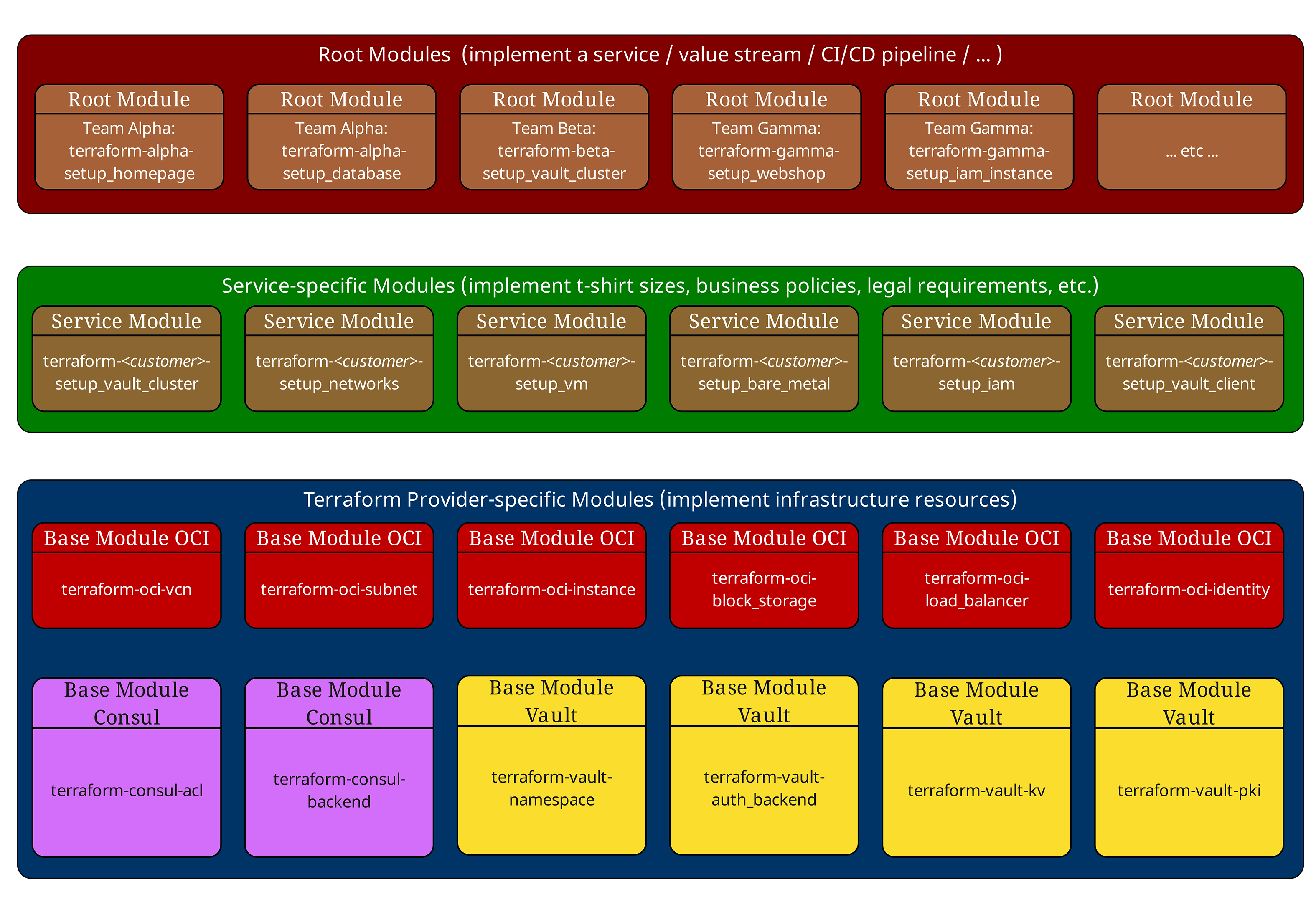

At ICT.technology, we implement this abstraction through a clear two-layer module architecture. The foundation consists of "base modules" - provider-specific modules that handle the unique requirements of each cloud platform and directly interact with the respective provider APIs. These base modules must be actively maintained and lifecycled to keep pace with provider updates and new features.

Built on top of these are "service modules", which implement the actual business services, compliance policies, and standardized configurations (such as t-shirt sizes). The key architectural principle is that service modules never interact directly with Terraform providers; they only call base modules, which maintains a clean separation of concerns and isolates provider-specific complexity in the base layer. When properly implemented, this approach gives end users a consistent interface and lets them deploy resources across different environments using consistent, provider-agnostic code. This capability must be deliberately architected and maintained, however; it is not an automatic feature of Terraform itself.
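
A service module in this style might look like the sketch below. The directory layout, the base module path `../../base/aws/compute`, its inputs, and the size values are all assumptions made for illustration.

```hcl
# File: modules/service/app_server/main.tf (hypothetical layout)
variable "name" { type = string }

variable "size" {
  type        = string
  description = "T-shirt size: small, medium, or large"

  validation {
    condition     = contains(["small", "medium", "large"], var.size)
    error_message = "Size must be one of: small, medium, large."
  }
}

locals {
  # Business-level sizes translated into base-module inputs.
  sizes = {
    small  = { cpu = 2, memory_gb = 4 }
    medium = { cpu = 4, memory_gb = 16 }
    large  = { cpu = 8, memory_gb = 32 }
  }
}

# No resource blocks here: the service module only calls a base module,
# which is the only place that talks to the provider.
module "compute" {
  source = "../../base/aws/compute"

  name      = var.name
  cpu       = local.sizes[var.size].cpu
  memory_gb = local.sizes[var.size].memory_gb
}
```

End users then only choose a name and a size, while the mapping to provider-specific instance types stays inside the base module.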

State Management

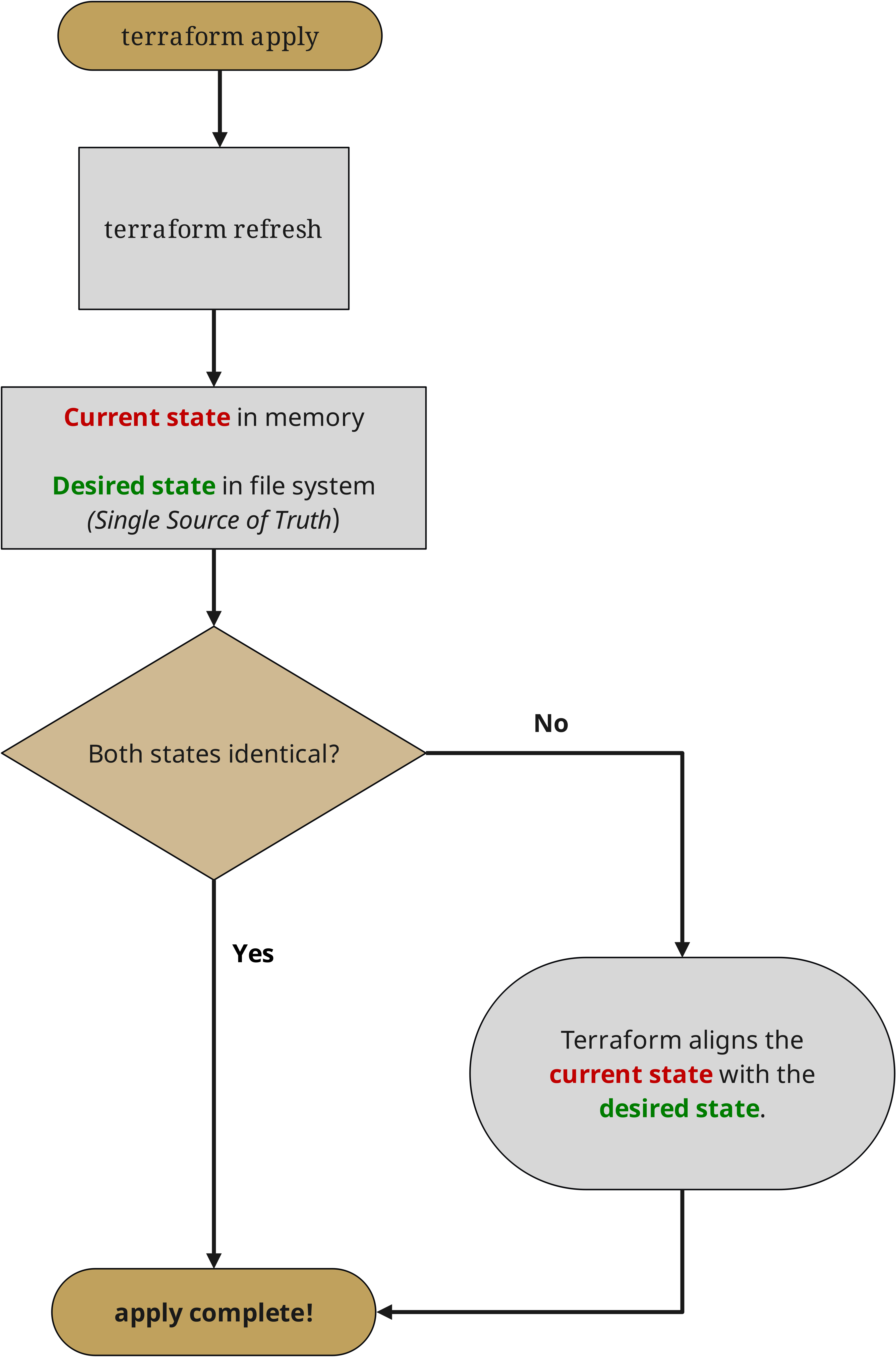

The power of Terraform becomes particularly evident in its state management capabilities. State files serve as a single source of truth for infrastructure, tracking all resources and their relationships. Both the free-to-use HashiCorp Terraform and Terraform Enterprise support remote state management through various backends, though they differ in implementation effort and operational responsibility.

HashiCorp Terraform supports remote state through configurable backends, and the terraform_remote_state data source lets one configuration read the outputs of another. State locking, which makes teamwork safe, is available with backends such as Consul and Amazon S3. The key difference lies in the implementation effort and operational responsibility: with the free-to-use HashiCorp Terraform, organizations must implement and maintain their own state management infrastructure, including backup procedures, state dependency management and corruption prevention measures. Terraform Enterprise provides these capabilities as managed services with integrated security controls, compliance enforcement and detailed audit logging.
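
As a sketch of what a self-managed setup can look like, the configuration below stores state in Consul and reads an output from a second, separately managed state. The Consul address, the key paths, and the `subnet_id` output are placeholders and assumptions.

```hcl
# Store this configuration's state in Consul, with locking enabled.
terraform {
  backend "consul" {
    address = "consul.example.internal:8501" # placeholder address
    scheme  = "https"
    path    = "terraform/state/app-prod"
    lock    = true
  }
}

# Read the outputs of another configuration's state (read-only).
data "terraform_remote_state" "network" {
  backend = "consul"

  config = {
    address = "consul.example.internal:8501"
    scheme  = "https"
    path    = "terraform/state/network-prod"
  }
}

# Assumes the network configuration exposes an output named "subnet_id".
output "app_subnet_id" {
  value = data.terraform_remote_state.network.outputs.subnet_id
}
```

Backups of the Consul key-value store and access control on the state paths remain the operator's responsibility in this setup.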

Room for Improvements: Deployment Strategies

While Terraform excels at infrastructure provisioning, organizations often need more sophisticated deployment strategies such as blue-green deployments or canary releases. These patterns typically require additional tooling and orchestration beyond Terraform's core capabilities. When a resource needs to be re-provisioned, Terraform's default behavior is to destroy the existing resource before deploying the new version, which can lead to service interruptions.
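
The destroy-then-create default can be reversed per resource with the `lifecycle` meta-argument. A minimal sketch, assuming an AWS instance whose image is supplied through an illustrative `ami_id` variable:

```hcl
variable "ami_id" { type = string }

resource "aws_instance" "app" {
  ami           = var.ami_id # changing the AMI forces a replacement
  instance_type = "t3.medium"

  lifecycle {
    # Create the replacement first, then destroy the old instance.
    create_before_destroy = true
  }
}
```

This shortens the gap between old and new resources, but it does not shift traffic or verify the new instance, so it is not a substitute for a full blue-green or canary process.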

Organizations frequently combine Terraform with complementary tools like HashiCorp Consul, HashiCorp Nomad, and application deployment platforms to achieve more sophisticated deployment patterns. Through careful state management and infrastructure versioning, Terraform handles the infrastructure components while other tools manage traffic routing and application deployment aspects.

Addressing Critical Risks in Cloud Infrastructure

As organizations scale their cloud infrastructure, they face several critical risks that must be carefully managed. Understanding these risks and implementing appropriate controls through infrastructure provisioning is essential for maintaining a secure and compliant environment.

Securing Cloud Infrastructure Through Code

Security vulnerabilities in cloud infrastructure often stem from inconsistent implementation of security controls and lack of standardization across environments. Traditional approaches to security, which relied heavily on manual configuration and point-in-time audits, struggle to keep pace with the rapid changes typical in cloud environments.

Terraform provides a robust foundation for implementing security-as-code practices through its deep integration with the broader HashiCorp tools ecosystem and other enterprise security solutions. HashiCorp Vault serves as a centralized secrets management and data protection platform that can be both configured and leveraged by Terraform.

Through Terraform, organizations can automate the setup of Vault's secret engines, authentication methods, and access control policies. This enables

- the automated management of secrets,

- encryption,

- and identity-based access controls

across the entire infrastructure. For example, Terraform can configure Vault to handle dynamic database credentials, integrate with cloud provider key management services, manage PKI infrastructure and require Multi-Factor-Authentication (MFA) before any changes to the infrastructure are applied - all while ensuring no sensitive data is stored in the Terraform code itself.
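
A small part of such a Vault setup might be sketched as follows, using the Vault provider. It assumes the provider obtains its address and credentials from the environment; the mount path, the policy name, and the role name `app-readonly` are hypothetical.

```hcl
# Secrets engine for dynamic database credentials.
resource "vault_mount" "database" {
  path        = "database"
  type        = "database"
  description = "Dynamic database credentials"
}

# Authentication method for machine-to-machine access.
resource "vault_auth_backend" "approle" {
  type = "approle"
  path = "approle"
}

# Access control policy: read-only access to one credential role.
resource "vault_policy" "app_db_read" {
  name = "app-db-read"

  policy = <<-EOT
    path "database/creds/app-readonly" {
      capabilities = ["read"]
    }
  EOT
}
```

Nothing in this configuration is itself a secret; it only describes where secrets live and who may read them.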

Identity and access management represents a critical component of infrastructure security. Organizations commonly use Keycloak as their identity provider, which can be fully managed through Terraform. This includes automating the setup of realms, clients, roles, and groups, as well as configuring federation with enterprise identity systems.

Terraform can also manage the integration between Keycloak and other security tools like Vault, creating a cohesive identity and access management framework. HashiCorp Boundary can provide additional identity-based access control and SSH auditing capabilities, but we at ICT.technology do not consider Boundary production-ready unless you already operate a very strongly secured and highly available PostgreSQL production cluster in your company's trust center. At the time of writing, PostgreSQL is a mandatory requirement of HashiCorp Boundary, and running such a cluster is often not realistic in enterprise-grade environments with high security standards, supply chain requirements and mandatory support contracts with a manufacturer.

The practical implementation of security controls in Terraform requires a comprehensive tooling approach. Pre-commit hooks in version control systems can perform initial security validations before code is even committed. Static analysis tools integrated into CI/CD pipelines can scan Terraform configurations for security misconfigurations, potential data exposures, and compliance violations. Tools like checkov provide automated security and compliance testing specifically designed for Terraform code.

These tools can be configured to enforce organization-specific security policies and provide security insights during the infrastructure deployment process. For static code analysis and security scanning, organizations commonly use tools like tfsec for security best practice scanning and HashiCorp Sentinel for policy-as-code enforcement and compliance verification. While Terraform itself doesn't directly configure security monitoring systems, it can set up the required infrastructure and integrations for security tooling. For example, Terraform can configure log forwarding to SIEM systems like Splunk or the ELK Stack, set up required networking, and manage access permissions.

Runtime security monitoring in enterprise environments requires careful integration between infrastructure deployment and security systems. Terraform can configure enterprise monitoring solutions through official providers, such as Datadog for infrastructure monitoring and Palo Alto Prisma Cloud for cloud security. The ServiceNow provider enables basic configuration management integration, though complex enterprise integrations often require additional orchestration tools.

Security automation tools play a crucial role in maintaining continuous compliance and security posture. Configuration validation tools can enforce security standards during the Terraform planning phase through custom rule engines. Secret scanning tools integrate with version control systems to prevent accidental commit of sensitive data. Policy-as-code frameworks enable organizations to define and enforce security policies consistently across all infrastructure deployments. These tools work together to create a comprehensive security automation pipeline that validates infrastructure changes at multiple stages, from initial development through to production deployment.

Preventing Configuration Drift and Misconfigurations

Configuration drift represents one of the most significant operational risks in cloud environments. As systems evolve and teams make changes, maintaining consistency between the intended and actual state of infrastructure becomes increasingly challenging. Misconfigurations can lead to security vulnerabilities, compliance violations, and operational issues.

Terraform's state-based approach provides a powerful solution to configuration drift. By maintaining a detailed record of the intended state of infrastructure and regularly comparing it against the actual state, Terraform can identify and correct drift automatically. This capability extends beyond basic resource configurations to include security settings, tags, and other metadata that are crucial for maintaining proper governance.

Configuration drift can be detected through regular Terraform plan operations, which compare the current state with the desired configuration. While HashiCorp Terraform requires manual execution of these operations and additional scripting for automation, Terraform Enterprise provides built-in features for automated drift detection and remediation workflows. Organizations using the free-to-use HashiCorp Terraform typically build their own automation around regular plan executions using CI/CD pipelines and custom scripts. This might involve scheduling plan operations and implementing notification systems to alert teams when drift is detected. The actual remediation of drift, regardless of the Terraform version used, requires careful consideration of potential service impacts and, in more severe cases, might need human review before applying changes.

The secure management of Terraform's state files represents another critical aspect of the security toolchain. Organizations that use the free version of Terraform typically implement a secure HashiCorp Consul backend for storing state files, whereas Terraform Enterprise has this feature integrated. Version control systems track changes to infrastructure definitions, while separate audit logging tools maintain detailed records of all infrastructure modifications. Tools like GitLab CI or Jenkins can be configured to enforce approval workflows for infrastructure changes, ensuring that security reviews occur before any modifications are applied.

Ensuring High Availability and Minimizing Downtime

Downtime and service failures can have severe impacts on business operations and customer satisfaction. Modern infrastructure provisioning must incorporate robust strategies for maintaining availability while enabling necessary changes and updates.

Terraform's sophisticated state management and dependency tracking enable organizations to implement complex deployment patterns that minimize downtime. Blue-green deployments, for example, can be orchestrated through Terraform to create new infrastructure alongside existing resources, validate the new environment, and configure load balancers to transition traffic gradually and minimize risk. After all traffic is redirected to the new environment and the new environment can also be considered stable, Terraform can decommission the resources which are no longer utilized in the old environment to reduce costs.
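
One way to express the gradual traffic transition is a weighted forward action on an AWS load balancer listener. This is a sketch under assumptions: the load balancer and both target groups exist already and are passed in by ARN, and all variable names are illustrative.

```hcl
variable "load_balancer_arn" { type = string }
variable "blue_target_group_arn" { type = string }
variable "green_target_group_arn" { type = string }

variable "green_weight" {
  type        = number
  default     = 0
  description = "Share of traffic (0 to 100) sent to the green environment"

  validation {
    condition     = var.green_weight >= 0 && var.green_weight <= 100
    error_message = "The green_weight value must be between 0 and 100."
  }
}

resource "aws_lb_listener" "app" {
  load_balancer_arn = var.load_balancer_arn
  port              = 80
  protocol          = "HTTP"

  default_action {
    type = "forward"

    forward {
      # Existing (blue) environment receives the remaining share.
      target_group {
        arn    = var.blue_target_group_arn
        weight = 100 - var.green_weight
      }

      # New (green) environment receives the configured share.
      target_group {
        arn    = var.green_target_group_arn
        weight = var.green_weight
      }
    }
  }
}
```

Raising `green_weight` step by step across several applies moves traffic gradually; once it reaches 100 and the new environment is stable, the blue resources can be removed from the configuration.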

The platform's ability to handle complex dependencies ensures that resources are created and updated in the correct order, reducing the risk of service disruptions during changes. Organizations can implement validation checks of values which are provided by users, runtime checks of dynamically created values, and automated testing via the integrated Terraform Testing Framework as part of their infrastructure provisioning workflow, catching potential issues as early as possible in the feedback loop, and before they impact production environments.
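
The first two kinds of checks can be sketched with a variable `validation` block and a `precondition`. The AMI name pattern, the instance type, and the minimum count are assumptions chosen for illustration.

```hcl
# Validation of a user-provided value, evaluated before planning resources.
variable "instance_count" {
  type = number

  validation {
    condition     = var.instance_count >= 2
    error_message = "At least two instances are required for availability."
  }
}

# A dynamically resolved value: the newest matching image in the account.
data "aws_ami" "app" {
  most_recent = true
  owners      = ["self"]

  filter {
    name   = "name"
    values = ["app-server-*"] # hypothetical naming pattern
  }
}

resource "aws_instance" "app" {
  count         = var.instance_count
  ami           = data.aws_ami.app.id
  instance_type = "t3.medium"

  lifecycle {
    # Runtime check on the dynamically resolved AMI.
    precondition {
      condition     = data.aws_ami.app.architecture == "x86_64"
      error_message = "The selected AMI must be built for x86_64."
    }
  }
}
```

Automated tests go in separate `.tftest.hcl` files, where `run` blocks with `assert` conditions are executed by `terraform test`.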

Maintaining Continuous Compliance

Compliance requirements continue to evolve and become more complex, particularly for organizations operating in regulated industries or across multiple jurisdictions. Traditional approaches to compliance, which often relied on periodic audits and manual reviews, struggle to provide the continuous assurance required in modern cloud environments.

Terraform enables organizations to implement compliance-as-code practices by allowing compliance requirements to be translated into infrastructure specifications that can be version-controlled. However, the implementation approach differs significantly between the free-to-use HashiCorp Terraform and Enterprise versions. With HashiCorp Terraform, organizations typically rely on a combination of carefully crafted modules, custom validation rules in variable definitions, and external tools for policy enforcement. This might involve implementing pre-commit hooks, custom validation scripts, and CI/CD pipeline checks to ensure compliance requirements are met.
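
Custom validation rules of this kind might look like the following sketch. The approved regions, the naming pattern, and the required tag keys are invented examples of policy, not recommendations.

```hcl
# Data sovereignty: only approved regions are accepted.
variable "region" {
  type = string

  validation {
    condition     = contains(["eu-central-1", "eu-west-1"], var.region)
    error_message = "Resources must be deployed in an approved EU region."
  }
}

# Naming convention: <team>-<env>-<purpose>.
variable "resource_name" {
  type = string

  validation {
    condition     = can(regex("^[a-z]{3}-(dev|test|prod)-[a-z0-9-]+$", var.resource_name))
    error_message = "Name must follow the pattern team-env-purpose, for example ops-prod-gateway."
  }
}

# Governance: every resource must carry these tag keys.
variable "tags" {
  type = map(string)

  validation {
    condition     = alltrue([for key in ["owner", "cost-center"] : contains(keys(var.tags), key)])
    error_message = "Tags must include the keys owner and cost-center."
  }
}
```

Placed in shared modules, such rules reject non-compliant input at plan time, before any change reaches a cloud provider.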

Terraform Enterprise provides built-in policy-as-code capabilities through Sentinel, which allows for sophisticated compliance checks before applying changes. Without Enterprise, organizations often implement similar capabilities using tools like Open Policy Agent (OPA), custom scripts, or CI/CD pipeline validations. These external tools can enforce requirements like resource naming conventions, security configurations, and data sovereignty requirements, but require additional setup and maintenance, and are less powerful than the integrated approach available in Terraform Enterprise.

The Terraform Enterprise platform's comprehensive audit capabilities provide detailed records of all infrastructure changes, including

- who made the change,

- when it was made, and

- what specifically was modified.

This audit trail is invaluable for demonstrating compliance during audits and investigating security incidents.

Looking Forward

As cloud infrastructure continues to evolve, the role of infrastructure provisioning in maintaining security, compliance, and operational excellence becomes increasingly critical. Organizations must adopt sophisticated approaches that can handle the complexity of modern cloud environments while ensuring consistent security and compliance controls.

Terraform's declarative approach, combined with its powerful state management and provider ecosystem, provides a robust foundation for addressing these challenges. By implementing infrastructure-as-code practices and leveraging advanced features for security and compliance management, organizations can build resilient cloud infrastructure that meets their business needs while maintaining high standards for security and compliance.

The future of infrastructure provisioning will likely see even greater emphasis on security automation, compliance automation, and intelligent orchestration. Organizations that invest in building robust infrastructure provisioning capabilities today will be well-positioned to handle these evolving requirements while maintaining the agility needed to compete in rapidly changing markets.