Target, one of the largest retailers in the USA with over 1,800 stores, faced a complex challenge: orchestrating workloads across multiple environments - from the public cloud to its own data centers and edge locations in stores. Kubernetes was already in use in some areas but was too complex and too expensive in terms of overall operational costs. The decision was ultimately made in favor of HashiCorp Nomad, which led to a significant acceleration of development cycles and a simplification of the infrastructure. This success story highlights a recurring pattern in the industry: companies are increasingly recognizing the value of lean, efficient orchestration solutions that focus on the essentials.

Container orchestration is becoming a critical component of modern IT infrastructures. As more companies modernize their application landscapes and transition to cloud-native architectures, they face the challenge of efficiently managing an increasing number of workloads. However, many organizations have found that common orchestration solutions, with their complexity, high resource requirements, and steep learning curves, hinder operational efficiency rather than support it. The logical consequence is higher operating costs, longer development cycles, and an excessive burden on IT teams.

Moreover, orchestrating containers alone is often not enough. Workloads running on servers (whether virtual or bare metal), applications in the form of executables, batch jobs, or even Java services require additional orchestration solutions. This further increases infrastructure complexity. As a result, many companies are searching for leaner, more efficient alternatives for workload orchestration.

Spotlight on HashiCorp Nomad.

Nomad has established itself as an elegant and powerful alternative for workload orchestration. Unlike Kubernetes, Nomad follows a pragmatic approach: a single binary, simple APIs, and an intuitive architecture allow teams to focus on their actual tasks instead of getting lost in managing the orchestration platform itself. And with Nomad, it does not matter whether workloads are containerized or not.

Why Simplicity Can Be Better: The Nomad Approach

Orchestrating workloads in modern enterprise environments requires a balanced approach between functionality and complexity. Target’s experience clearly illustrates this: the company needed to operate applications in Google Cloud, its own data centers, and over 1,800 stores. The challenge was to efficiently and centrally orchestrate these diverse environments without unnecessarily increasing complexity.

The decision to use Nomad was based on several key factors that proved crucial in practice.

1. A central aspect was consistency: Since Target was already using Docker containers with Kubernetes in its stores, the ability to reuse these same containers across other environments was highly valuable. This eliminated the need to create separate RPM packages or other deployment formats.

2. The speed of deployments also played an important role. Target’s development teams needed the ability to quickly roll out and test new versions, especially during the critical period from the start of the Black Friday season to Christmas. With Nomad, they could deploy changes within minutes, compared to the 30-minute or longer deployment cycles of their previous RPM- and Docker-based processes.

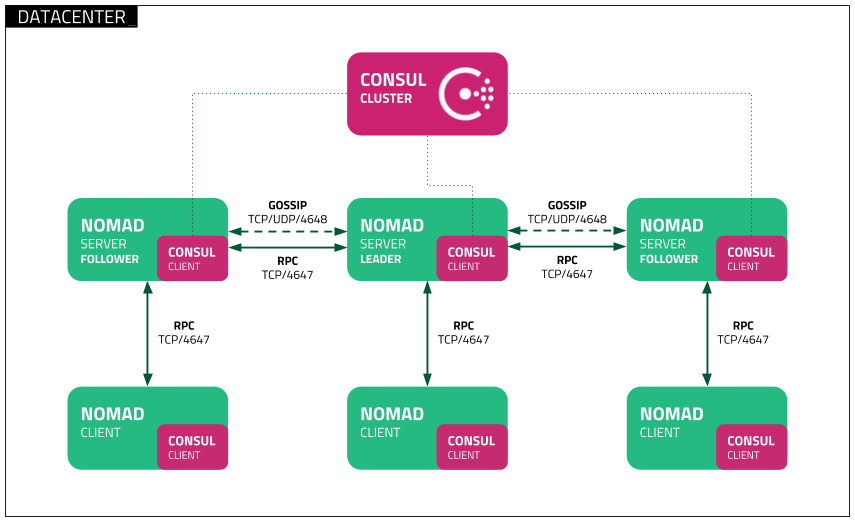

3. The native integration with the existing HashiCorp stack proved to be another decisive advantage. Target already had a functional infrastructure with Consul for service discovery and DNS, as well as Vault for secrets management. Nomad’s seamless integration into this ecosystem allowed the team to continue leveraging these proven components.

4. Multi-region support was essential for Target’s distributed infrastructure. With on-premise data centers in Minnesota and cloud presence in the US-Central and US-East regions, the team needed the ability to deploy workloads company-wide and per region, regardless of location, while maintaining federated configuration and monitoring.

5. Finally, integration with Terraform was a key factor. Target’s teams were already using well-established deployment pipelines based on Terraform, and the ability to retain and extend these for Nomad deployments significantly simplified adoption.

Resource Optimization and Mixed Workloads

Efficient resource utilization is crucial for companies working with diverse workloads. Nomad offers a powerful orchestration solution that enables optimized resource usage and flexible handling of mixed workloads.

Optimal Use of Resources

Nomad utilizes an efficient bin-packing algorithm that optimally distributes server resources by intelligently placing smaller tasks into available free capacity. This reduces unused resources and maximizes cluster efficiency. In high-performance computing environments or companies with highly variable workloads, Nomad ensures that expensive hardware is fully utilized.

- Dynamic Resource Allocation: Workloads are placed based on real-time demands to avoid idle times.

- Increased Efficiency: Intelligent job placement reduces hardware requirements, lowering operational costs.

- Load Balancing: With the spread scheduling algorithm or spread rules in a job, new workloads can be placed on less utilized nodes to ensure even system utilization.
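As a minimal sketch, the placement strategy described above can be selected in the Nomad server agent configuration. The fragment below assumes a fresh cluster, since this default is applied when the cluster is first bootstrapped; it is an illustration, not a recommended production setup.

```hcl
# Nomad server agent configuration (fragment, illustrative only).
server {
  enabled = true

  default_scheduler_config {
    # "binpack" fills nodes as densely as possible before using the next one;
    # "spread" distributes allocations evenly across nodes instead.
    scheduler_algorithm = "binpack"
  }
}
```

Bin packing favors fewer, fuller nodes, which is what reduces idle capacity; spread trades some of that density for a more even load.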

Support for Mixed Workloads

One of Nomad’s strengths is its ability to orchestrate various types of workloads in parallel. While Kubernetes is primarily designed for containerized applications, Nomad can also manage standalone applications, batch jobs, microservices (containers), and mission-critical processes.

- Batch Processing & HPC Workloads: Ideal for research institutes or companies with data-intensive computations requiring short-term high computing power.

- Microservices & Cloud-Native Applications: Modern, distributed architectures benefit from Nomad’s simple API and declarative configuration.

- Legacy Applications & System Processes: Unlike Kubernetes, Nomad can also efficiently orchestrate non-containerized workloads.
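To illustrate the workload types listed above, here is a sketch of a single Nomad job that runs a container next to a plain Java service. The job, image, and artifact names are hypothetical, and the resource figures are placeholders.

```hcl
# Illustrative job: one containerized task and one non-containerized Java task.
job "storefront" {
  datacenters = ["dc1"]
  type        = "service"

  group "frontend" {
    count = 2

    task "web" {
      driver = "docker" # containerized microservice

      config {
        image = "registry.example.com/storefront/web:1.4.2" # hypothetical image
      }

      resources {
        cpu    = 250 # MHz
        memory = 256 # MB
      }
    }
  }

  group "pricing" {
    task "pricing-service" {
      driver = "java" # runs a JAR directly, no container image needed

      # Downloaded into the task's local/ directory before the task starts.
      artifact {
        source = "https://artifacts.example.com/pricing-service.jar" # hypothetical URL
      }

      config {
        jar_path    = "local/pricing-service.jar"
        jvm_options = ["-Xmx384m"]
      }

      resources {
        cpu    = 500
        memory = 512
      }
    }
  }
}
```

A batch workload would use the same structure with `type = "batch"` at the job level.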

Simplification of Operations

Nomad simplifies operations through a minimalist architecture with a single binary and no external dependencies. This reduces administrative overhead and makes it particularly easy for smaller IT teams to operate a powerful orchestration solution.

With this combination of high resource efficiency and flexible workload support, Nomad provides a versatile platform suitable for both dynamic, modern cloud environments and traditional IT infrastructures.

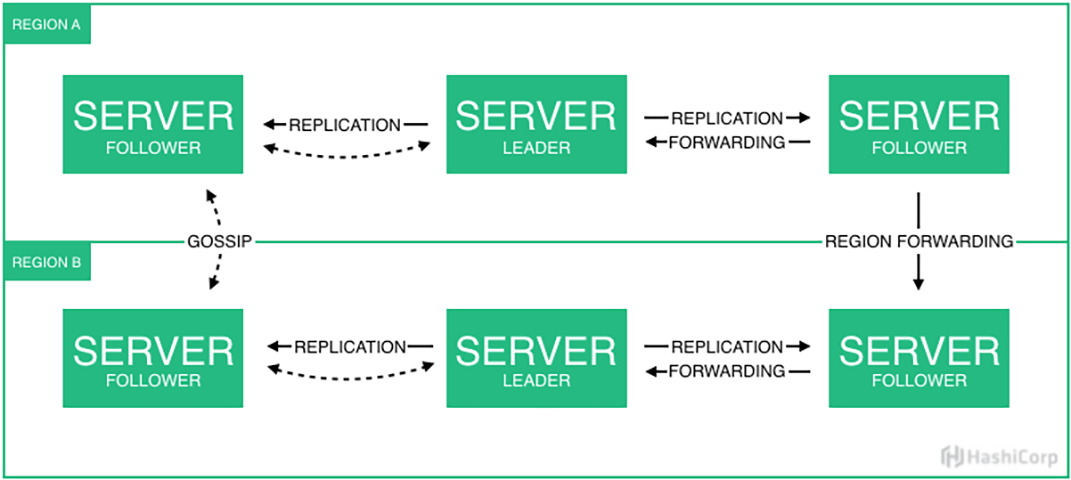

Enterprise-Scale Scheduling and Bin Packing

Nomad’s scheduling engine differs fundamentally from other orchestration solutions in its pragmatic and efficient approach. At its core, Nomad uses a distributed scheduler based on the Raft consensus protocol, which enables fast and reliable scheduling decisions across a cluster. Target implemented this architecture with three-node clusters per region, where one server acts as the leader and the other two serve as followers, ready to take over in case of failure. Because Raft needs a majority of servers (a quorum) to elect a leader, a three-node cluster tolerates the loss of one server: the two remaining servers still form a majority and can elect a new leader. For scenarios requiring maximum availability, a five-node cluster is the better choice: its servers can be distributed across different fault domains or data centers, and the cluster remains fully operational even if two servers fail, for example the current leader and one follower. A three-node cluster would lose quorum in that situation.
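A server cluster of this kind could be configured roughly as follows. This is a sketch of one server's agent configuration; the region name, data directory, and IP addresses are assumptions for illustration and do not describe Target's actual setup.

```hcl
# Nomad agent configuration for one server of a regional cluster (illustrative).
region     = "us-central"
datacenter = "dc1"
data_dir   = "/opt/nomad/data"

server {
  enabled = true

  # Number of servers expected in this region before the first leader
  # election takes place. Use 5 for a five-node cluster.
  bootstrap_expect = 3

  server_join {
    # Hypothetical addresses of the servers in this region.
    retry_join = ["10.0.0.10", "10.0.0.11", "10.0.0.12"]
  }
}
```

The same file, with its own address, would be deployed on each server in the region.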

What makes Nomad’s scheduler particularly noteworthy is its advanced bin-packing algorithm, which we briefly mentioned in the previous section. While many orchestration solutions rely on simple placement strategies that often lead to inefficient resource distribution, Nomad employs a multidimensional approach. This simultaneously considers CPU, memory, network ports, and even custom resources. At Target, this resulted in significantly higher resource efficiency: with just eight nodes per cluster, the company was able to efficiently operate a wide range of workloads without the excessive over-provisioning of underlying hardware and network infrastructure often seen with other platforms like VMware or Kubernetes.

Scheduling decisions are based on a sophisticated system of constraints and affinities. Developers can define precise requirements for their workloads, such as hardware specifications, network topology, or geographic location. The scheduler takes these constraints into account along with the current state of the cluster to make optimal placement decisions. At Target, this was particularly crucial for managing multi-region deployments between their data centers in Minnesota and the Google Cloud regions.
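In a job specification, such requirements might look like the following sketch. The node class, datacenter names, and image are hypothetical; the attribute names (`attr.kernel.name`, `node.class`, `node.datacenter`) are standard Nomad node attributes.

```hcl
# Illustrative job showing a hard constraint, a soft affinity, and a spread rule.
job "regional-api" {
  datacenters = ["dc1", "dc2"]

  # Hard requirement: only Linux nodes are eligible.
  constraint {
    attribute = "${attr.kernel.name}"
    value     = "linux"
  }

  # Soft preference: favor nodes of class "highmem" when available.
  affinity {
    attribute = "${node.class}"
    value     = "highmem"
    weight    = 50
  }

  group "api" {
    count = 4

    # Distribute the four instances across the available datacenters.
    spread {
      attribute = "${node.datacenter}"
      weight    = 100
    }

    task "api" {
      driver = "docker"

      config {
        image = "registry.example.com/api:2.0.0" # hypothetical image
      }

      resources {
        cpu    = 300
        memory = 256
      }
    }
  }
}
```

Constraints filter out nodes entirely, while affinities and spread rules only influence the ranking of the nodes that remain.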

A concrete example from Target’s implementation demonstrates the scheduler’s capabilities: the company runs a full ELK stack (Elasticsearch, Logstash, Kibana) on Nomad, processing around 300GB of logs daily. This showcases the scheduler’s flexibility in handling different workload types - from stateless applications to complex, stateful systems like Elasticsearch. The scheduler automatically recognizes the specific requirements of these different workloads and places them optimally within the available infrastructure.

Another standout feature of the scheduler is its ability to handle preemptible instances. Target is experimenting with this feature in its staging environment, allowing them to use cost-effective, interruptible cloud instances while the scheduler ensures continuous service operation. This demonstrates how Nomad’s scheduling engine not only delivers technical efficiency but also enables direct cost savings.

Enterprise-Grade Features

Nomad Enterprise extends the open-source version of Nomad with additional features specifically designed to meet the needs of large enterprises. Especially in complex and distributed environments, Nomad Enterprise demonstrates its strengths, enabling companies to achieve even greater scalability, security, and governance.

Governance and Multi-Cloud Management

One of the biggest challenges for enterprises with globally distributed infrastructures is the efficient management of clusters across multiple regions and cloud providers. Nomad Enterprise provides a powerful federated cluster architecture for this purpose:

- Federated Clusters Across Multiple Regions and Cloud Providers: Companies can operate multiple Nomad clusters in different regions or cloud environments and manage them centrally. For example, one cluster might run on-premise, another in Oracle Cloud Infrastructure (OCI) in Frankfurt across three data centers, and another in Amazon Web Services (AWS). Together, they form a unified application pool. This enables flexible workload distribution based on geographic proximity, application-specific resource and latency requirements, software licensing conditions, or overarching compliance regulations.

- Independent Deployment Control per Region: Each region can operate and be managed independently, ensuring that a failure or issue in one region does not impact other clusters. At the same time, global policies can be centrally managed. This means that an on-premise Nomad cluster can also deploy and manage workloads in OCI or AWS. Job and client state is not replicated between the local data center and cloud environments; the exceptions are ACL policies, global ACL tokens, and Sentinel policies enforcing business rules (which we will discuss in a later section), which are replicated from the authoritative region.

- Automated Validation and Deployment Processes: Nomad Enterprise allows deployments to be automatically validated and rolled out in production environments. This reduces manual errors and increases release reliability.
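As a sketch of how a job can target several federated regions at once, Nomad Enterprise offers a `multiregion` block in the job specification. The region names below follow the regions mentioned earlier in this article; the datacenter names, counts, and image are assumptions.

```hcl
# Illustrative multi-region job (requires Nomad Enterprise).
job "storefront-api" {
  multiregion {
    strategy {
      max_parallel = 1          # roll out one region at a time
      on_failure   = "fail_all" # a failed region marks all regions as failed
    }

    region "us-central" {
      count       = 3
      datacenters = ["us-central-1"] # hypothetical datacenter name
    }

    region "us-east" {
      count       = 2
      datacenters = ["us-east-1"] # hypothetical datacenter name
    }
  }

  group "api" {
    # The instance count is taken from the region blocks above.
    task "api" {
      driver = "docker"

      config {
        image = "registry.example.com/storefront/api:1.0.0" # hypothetical image
      }
    }
  }
}
```

Each region still schedules its own allocations; the `multiregion` block only coordinates the order and failure handling of the rollout.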

High Availability and Scalability

For mission-critical applications, high availability is essential. Nomad Enterprise provides additional features that enhance the resilience and scalability of Nomad clusters:

- Three-Node or Five-Node Clusters per Region for Fault Tolerance: To ensure high availability, Nomad servers are operated in clusters of three or five nodes per region. A three-node cluster continues operating if one server fails, a five-node cluster if up to two servers fail.

- Automatic Consensus for Scheduling Decisions: Nomad leverages the Raft consensus protocol to make scheduling decisions reliably and efficiently across a cluster. In the event of a leader failover, a new leader is automatically elected to maintain continuous availability.

- Flexible Scaling of Client Nodes: Nomad can dynamically scale client nodes based on workload demand, allowing enterprises to optimize resource utilization and reduce operational costs.

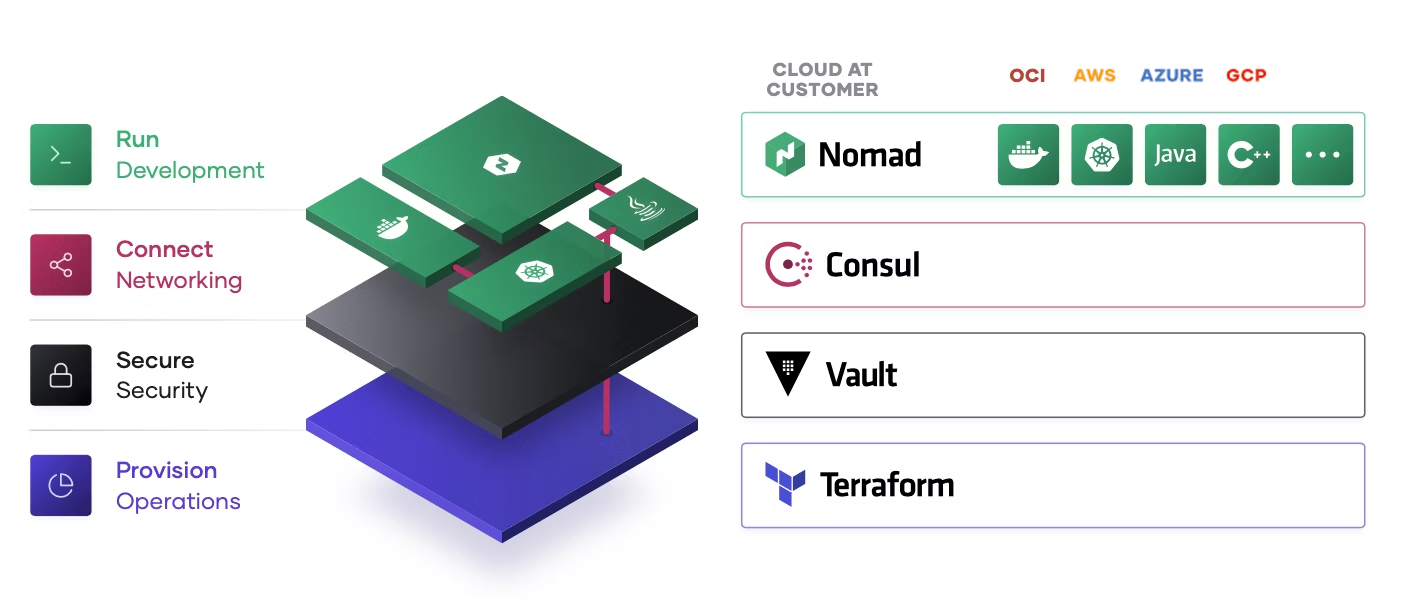

Implementation of Policies and Regulatory Compliance

In regulated industries such as finance, healthcare, or public administration, strict policies for security and compliance adherence are essential. Nomad Enterprise provides a powerful solution for policy enforcement through the integration of HashiCorp Sentinel, allowing organizations to define and automate rules directly within the orchestration process. This enables efficient enforcement of regulatory requirements and internal security policies. Overall, businesses benefit from an automated, scalable policy validation solution that seamlessly integrates into existing workflows and compliance strategies. Sentinel is not only integrated into Nomad but also into Terraform, Vault, and Consul, extending Policy-as-Code implementation across all infrastructure layers.

Sentinel: Policy Enforcement for Nomad Enterprise

Sentinel is a policy-based framework that allows administrators to define fine-grained policies as code and enforce them automatically through Nomad. Sentinel policies build on Nomad’s existing Access Control List (ACL) system, enabling precise control over job submission and execution.

Key Features of Sentinel in Nomad Enterprise:

- Fine-Grained Policy Control: Sentinel policies can specify which jobs can be executed and when, enforce the use of approved container images, and ensure compliance with other security requirements.

- Declarative Policy Structure: Policies are defined using the Sentinel language, optimized for readability and rapid evaluation. The complexity of the policies can be freely adjusted but should be designed to avoid performance impact.

- Detailed Object Utilization: Sentinel policies can access various objects within the Nomad ecosystem, including job definitions, existing jobs, ACL tokens, and namespaces. This allows for differentiated validation and enforcement mechanisms.

Management and Enforcement of Sentinel Policies

Sentinel policies are managed via the Nomad CLI, offering different enforcement levels:

- Advisory Policies: These generate warnings when a job violates a policy but do not block execution.

- Soft-Mandatory Policies: Jobs that violate a policy are rejected by default but can still be executed with an explicit override, for example by submitting the job with the -policy-override flag.

- Hard-Mandatory Policies: Jobs that do not comply with defined policies are strictly blocked and cannot be executed.

For example, a policy can be applied as follows:

nomad sentinel apply -level=soft-mandatory compliance-policy policy.sentinel

Practical Example: Restricting Job Executions

A common scenario is ensuring that only specific job drivers can be used to minimize security risks. A Sentinel policy could, for example, enforce that only the "exec" driver (used for orchestrating executables) is allowed and prevent jobs from being started with unauthorized drivers such as "docker" or "raw_exec":

    main = rule { all_drivers_exec }

    all_drivers_exec = rule {
        all job.task_groups as tg {
            all tg.tasks as task {
                task.driver is "exec"
            }
        }
    }

Terraform Integration for Sentinel Policies

Terraform can be used to automate policy management. With the nomad_sentinel_policy resource type, Sentinel policies can be directly integrated into Infrastructure-as-Code processes.

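    resource "nomad_sentinel_policy" "enforce_exec_driver" {
      name   = "restrict-to-exec"
      policy = file("restrict-to-exec.sentinel")

      # The provider requires a scope; "submit-job" evaluates the policy on job submission.
      scope             = "submit-job"
      enforcement_level = "hard-mandatory"
    }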

This ensures that the desired policy is applied consistently across the organization.

Comparison of Nomad and Kubernetes

Nomad stands out for its simplicity, flexibility, and efficiency, while Kubernetes excels with its advanced networking, storage, and monitoring capabilities. Companies should evaluate which solution best suits their infrastructure and use cases. While Kubernetes is widely regarded as the standard for container orchestration, more and more organizations are adopting HashiCorp Nomad as a leaner alternative that is less complex and supports a broader range of workload types.

Features and Benefits of Nomad

Nomad offers several advantages, making it particularly appealing to companies looking for a simple, flexible, and resource-efficient orchestration solution:

- Ease of Setup: Nomad is faster and easier to deploy than Kubernetes, as it requires only a single binary for clients and servers and has a lower configuration complexity.

- Flexible Workload Support: While Kubernetes primarily focuses on containerized applications, Nomad, as mentioned earlier, can efficiently manage non-containerized workloads and legacy systems.

- Operational Simplicity: Nomad's architecture is more lightweight and requires less administrative overhead, allowing teams to focus more on application development rather than platform management.

- Lower Organizational Resource Requirements: The simplified operation naturally leads to lower staffing needs. Kubernetes is a knowledge- and personnel-intensive platform, which typically only justifies its deployment at a significant scale. Many customers require additional personnel just to maintain Kubernetes clusters and analyze incidents. The organizational burden on a company is significantly lower with Nomad.

- Lower Resource Consumption: Nomad consumes fewer system resources than Kubernetes, making it an ideal solution for edge computing, IoT environments, and resource-constrained systems. Combined with the reduced personnel requirements, infrastructure costs are noticeably lower, positively impacting total cost of ownership (TCO) and return on investment (ROI).

- Seamless Integration with the HashiCorp Ecosystem: Through its tight integration with HashiCorp Consul for service discovery and Vault for secrets management, companies can fully leverage their existing HashiCorp stacks.

- Built-in Multi-Region Support: Nomad natively supports multi-region and multi-cloud deployments, whereas Kubernetes requires additional complex configurations and management layers for such setups.

Challenges

Despite its many advantages, there are also challenges when using Nomad, especially in comparison to Kubernetes:

- Load Balancing: Kubernetes has built-in load balancing through kube-proxy and Ingress. Nomad, on the other hand, requires external solutions for this functionality. A popular choice is Traefik, which integrates directly with Nomad. For organizations seeking unified support, HashiCorp Consul is the preferred solution.

- Network and Policy Management: Kubernetes provides powerful networking capabilities, including Network Policies for traffic control. Nomad requires integrations with HashiCorp Consul, third-party tools, or HashiCorp Sentinel, though Sentinel is only available in the Enterprise version.

- Service Mesh Support: Kubernetes can be extended with service meshes such as Istio or Linkerd. While Nomad integrates with Consul, it does not natively offer the full functionality of a Kubernetes-based service mesh.

- Monitoring and Logging: Kubernetes includes built-in solutions for monitoring and logging. Nomad primarily relies on external tools for observability and logging.

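- Configuration Approach: Both platforms are configured declaratively, Kubernetes through YAML manifests and Nomad through HCL job specifications. Kubernetes, however, has a considerably larger ecosystem of templating, packaging, and GitOps tools, which makes configuration easier to manage at scale. With Nomad, more of this tooling has to be assembled by the team, which can become challenging in highly complex environments.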

- Persistent Storage Solutions: Kubernetes provides a broad range of persistent storage options, including local, cloud, and network storage. Nomad supports host volumes and Container Storage Interface (CSI) plugins but otherwise leaves storage management to the operating system or the container runtime, such as Docker, which may add complexity in hybrid or large-scale environments.

This comparison highlights that while Nomad excels in simplicity, flexibility, and efficiency, Kubernetes stands out with its extensive built-in networking, storage, and monitoring capabilities.

Practical Benefits for Development Teams

The experiences of Target and other HashiCorp customers show that Nomad not only simplifies infrastructure management but also brings significant benefits to development teams. The following improvements have proven particularly valuable in day-to-day operations:

- Accelerated Development Cycles: With Nomad, new services can transition much faster from the proof-of-concept (PoC) phase to production. The ability to deploy and test workloads effortlessly has drastically reduced the time-to-market for new features and applications.

- Simplified Deployments: By using Nomad, deployment times have been reduced from hours to minutes. This is especially beneficial during peak demand periods, such as before the Black Friday season in retail, enabling companies to roll out changes in real time without disrupting operations or facing resource constraints.

- Improved Resource Utilization: Thanks to Nomad’s efficient bin-packing algorithm, organizations can optimize server utilization, mix different architectures and server types, and significantly reduce overall hardware requirements. This leads to substantial and measurable infrastructure cost savings.

- Flexible Workload Support: While Kubernetes is primarily optimized for containerized applications, Nomad allows the orchestration of diverse workload types - from traditional Java applications to modern microservices. Target was able to establish a unified platform for all workloads without needing additional orchestration solutions.

- Blue-Green Deployments: To minimize downtime during updates, Target uses blue-green deployments at the data center level. New versions are deployed alongside the existing version and only made live after successful testing. If issues arise, the system can instantly revert to the previous version.
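Target applies blue-green deployments at the data center level; Nomad also supports the pattern within a single job. The following sketch shows one way to express it with the `update` block, where setting `canary` equal to `count` starts a complete new set of instances next to the old one. Names, image, and timings are hypothetical.

```hcl
# Illustrative blue-green style update for a single job.
job "storefront-api" {
  datacenters = ["dc1"]

  group "api" {
    count = 3

    update {
      canary           = 3     # equal to count: a full "green" set runs beside "blue"
      max_parallel     = 3
      min_healthy_time = "30s"
      healthy_deadline = "5m"
      auto_promote     = false # an operator or pipeline promotes after testing
      auto_revert      = true  # return to the last stable version on failure
    }

    task "api" {
      driver = "docker"

      config {
        image = "registry.example.com/storefront/api:1.1.0" # hypothetical image
      }
    }
  }
}
```

With `auto_promote = false`, the old instances keep running until the deployment is promoted, for example with `nomad deployment promote`.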

These features and improvements demonstrate that Nomad Enterprise is a powerful yet straightforward solution for companies seeking a modern, highly available, and cost-efficient orchestration platform.

Integration with the HashiCorp Stack

One of Nomad's strengths is its seamless integration with other HashiCorp tools. Target and other customers with critical infrastructures leverage these synergies extensively:

- Consul for service discovery and dynamic configuration

- Vault for secrets management and dynamic credentials

- Terraform for Infrastructure as Code and automated deployments

This integration enables a fully automated pipeline where developers can submit changes via Git, which are then automatically validated and deployed through CI/CD processes.
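How the Consul and Vault integrations appear in a job can be sketched as follows. The example assumes that Nomad is already connected to Consul and Vault and that Vault access uses workload identity with a role named `storefront` (Nomad 1.7 or later); the secret path, role, and image are hypothetical.

```hcl
# Illustrative job using Consul for service registration and Vault for secrets.
job "storefront-api" {
  datacenters = ["dc1"]

  group "api" {
    network {
      port "http" {
        to = 8080
      }
    }

    # Registers the service in Consul, including a health check.
    service {
      name = "storefront-api"
      port = "http"

      check {
        type     = "http"
        path     = "/health"
        interval = "10s"
        timeout  = "2s"
      }
    }

    task "api" {
      driver = "docker"

      config {
        image = "registry.example.com/storefront/api:1.0.0" # hypothetical image
        ports = ["http"]
      }

      # Requests a Vault token for this task (role name is an assumption).
      vault {
        role = "storefront"
      }

      # Renders a secret from Vault (KV v2 path assumed) into environment variables.
      template {
        data        = <<EOT
DB_PASSWORD={{ with secret "secret/data/storefront/db" }}{{ .Data.data.password }}{{ end }}
EOT
        destination = "secrets/db.env"
        env         = true
      }
    }
  }
}
```

The credentials never appear in the job file itself; they are fetched at runtime and placed in the task's secrets directory.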

Example: Automated Deployment Pipeline

Developers work in a Git-based environment where all changes to applications and infrastructure code are versioned. The typical workflow follows these steps:

- Code Change & Commit: A developer pushes a new version of an application to a Git repository. This could be a simple update to a microservice API or a configuration adjustment.

- Automated CI/CD Pipeline Execution: A build system such as GitHub Actions or Jenkins detects the change and triggers a pipeline that includes multiple steps:

  - Build & Test: The application is compiled with the latest changes and packaged as a container image or binary.

  - Security Scan & Secrets Fetching: Vault provides dynamic secrets, ensuring that credentials are not hardcoded or stored in pipelines.

  - Infrastructure Validation: Terraform ensures that all required infrastructure components are correctly provisioned.

- Deployment with Nomad:

  - Once the build is successful, a new Nomad job file is generated or updated.

  - The Nomad CLI or a Terraform-Nomad combination is used to register and roll out the new job.

  - Consul automatically handles service discovery, allowing new instances to be instantly recognized by other services.

- Blue-Green Deployment & Traffic Switching:

  - With Nomad’s canary-based update strategy, the new version runs in parallel with the existing one.

  - If all health checks pass, traffic is redirected to the new version using Consul load balancing or Traefik.

  - If issues arise, an automatic rollback is executed.

- Monitoring & Optimization:

  - Prometheus or other observability tools collect deployment metrics, which can be accessed via Nomad’s integrated REST API.

  - If necessary, resources can be dynamically adjusted using Terraform and Nomad’s auto-scaling capabilities.
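The deployment step of this workflow can be sketched with the Terraform Nomad provider, which registers a job file as part of a regular `terraform apply`. The Nomad address and file path are hypothetical.

```hcl
# Illustrative Terraform configuration that registers a Nomad job.
terraform {
  required_providers {
    nomad = {
      source = "hashicorp/nomad"
    }
  }
}

provider "nomad" {
  address = "https://nomad.example.internal:4646" # hypothetical cluster address
}

resource "nomad_job" "storefront_api" {
  # The job file produced or updated by the build stage of the pipeline.
  jobspec = file("${path.module}/jobs/storefront-api.nomad.hcl")
}
```

Because the job is an ordinary Terraform resource, changes to the job file show up in `terraform plan` before they reach the cluster.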

Benefits of This Pipeline

- Full Automation: No manual deployments, minimizing errors and accelerating the process.

- High Security: Vault ensures credentials are never stored in the codebase or CI/CD pipeline.

- Fast Rollbacks & Low Risk: Thanks to blue-green deployments and Consul-based service discovery, faulty versions can be rolled back without downtime.

- Efficient Resource Utilization: Terraform optimizes infrastructure provisioning, while Nomad’s bin-packing algorithm ensures optimal server usage.

This workflow enables organizations to deploy new versions within minutes and dynamically adapt their infrastructure to changing requirements – a crucial advantage in highly dynamic environments.

Outlook: The Future of Workload Orchestration

The future of IT infrastructure will increasingly be shaped by edge computing, multi-cloud strategies, and hybrid workloads. HashiCorp’s roadmap for Nomad highlights several promising developments:

- NVIDIA multi-instance GPU support, Golden job versioning, NUMA, and device quotas (already introduced in Nomad 1.9)

- Infrastructure Lifecycle Management (ILM) to simplify infrastructure management using Terraform, Packer, and Waypoint

- Dynamic Node Metadata to enhance flexibility in managing Nomad environments

- Workload Identities to improve security through expanded identity management and integration with Vault and Consul

Nomad offers a powerful yet pragmatic solution for modern workload orchestration. Companies investing in efficient, scalable orchestration solutions today will benefit from lower operational costs, faster development cycles, and higher team productivity.

Contact us for more information! We are happy to analyze your specific use case, develop a tailored scenario, and advise you on selecting the optimal platform for your needs.