"Versioning? Oh, I just always use the latest version - what could possibly go wrong?"

This very attitude is one of the reasons why some engineers get woken up by a phone call at 3 a.m. And why, the next morning, people stare awkwardly at their shoes during the daily stand-up.

Before it happens to you too, let’s briefly talk about this in the final part of our “Terraform @ Scale” series.

Read more: Terraform @ Scale - Part 7: Module Versioning (Best Practices)

"To hell with visibility - as long as it works!"

That is the mindset with which most platform engineering teams rush headlong into disaster. It is like cooking blindfolded in a strange kitchen: it works for a while, but when something burns, it really burns.

And nothing is worse than the look of helpless faces when everything is on fire.

In the previous two parts, dependency pain was our main topic. Today, let us take a look at what options we have to rescue a child that has already fallen into the well - alive, if possible.

Read more: Terraform @ Scale - Part 6c: Module Dependencies for Advanced Users (and Masochists)

In the previous article we examined the hidden complexity of nested modules and the ripple effect, and it became increasingly clear what unpleasant consequences this can have for operations and lifecycle management. Beginner mistakes open the floodgates to such problems, above all the mistake of stuffing several or even all Terraform modules into a single Git repository. With a bit of seniority and clean planning, however, such problems can be minimized from the outset.

In this part, let us take a look at how one can deal with such dependencies in practice without the pain getting out of hand.

Read more: Terraform @ Scale - Part 6b: Practical handling of nested modules

When a single module update cripples 47 teams...

It is Monday morning, 10:30 a.m. The platform engineering team of a major managed cloud services provider has just released a "harmless" update to the base module for VM instances, from v1.3.2 to v1.4.0. The change? A new but mandatory freeform tag for cost center allocation of resources.

What nobody has on the radar: a decision made long ago by a senior engineer, who vehemently insisted that all Terraform modules be placed in a single Git repository. “Versioning is too much micromanagement. This way it is tidier. And it requires less work,” he said at the time.

The same Monday, 3:00 p.m.: 47 teams from different customers have so far reported that their CI/CD pipelines are failing. The reason? Their root modules reference the provider’s updated modules, but nobody has implemented the new parameters in their own root modules. The provider’s compliance check is active and rejects the Terraform runs due to missing mandatory tags. What was planned as an improvement has turned into an organization-wide standstill with massive external impact on hosting customers.

Welcome to the world of module dependencies in Terraform @ Scale.
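
To make the failure mode above concrete, here is a minimal sketch of how a consuming root module could pin the shared module to a known tag instead of following whatever is currently published. The repository URL, the `vm-instance` subdirectory, and the input names `instance_name` and `cost_center` are assumptions made purely for illustration; they are not taken from the scenario.

```hcl
# Hypothetical root module of one of the affected teams.
# Repository URL, subdirectory, and input names are illustrative assumptions.
module "vm_instance" {
  # Pin to the last known-good tag instead of tracking the default branch.
  # In a single shared repository, this tag covers every module stored in it.
  source = "git::https://git.example.com/platform/terraform-modules.git//vm-instance?ref=v1.3.2"

  instance_name = "app-server-01"

  # After a deliberate upgrade to ref=v1.4.0, the new mandatory input
  # would have to be supplied here, for example:
  # cost_center = "cc-4711"
}
```

Pinning does not bypass a compliance check on the provider side. It only ensures that moving to a new module version is a deliberate, reviewed change rather than something that happens to 47 pipelines at once.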

Read more: Terraform @ Scale - Part 6a: Understanding and Managing Nested Modules

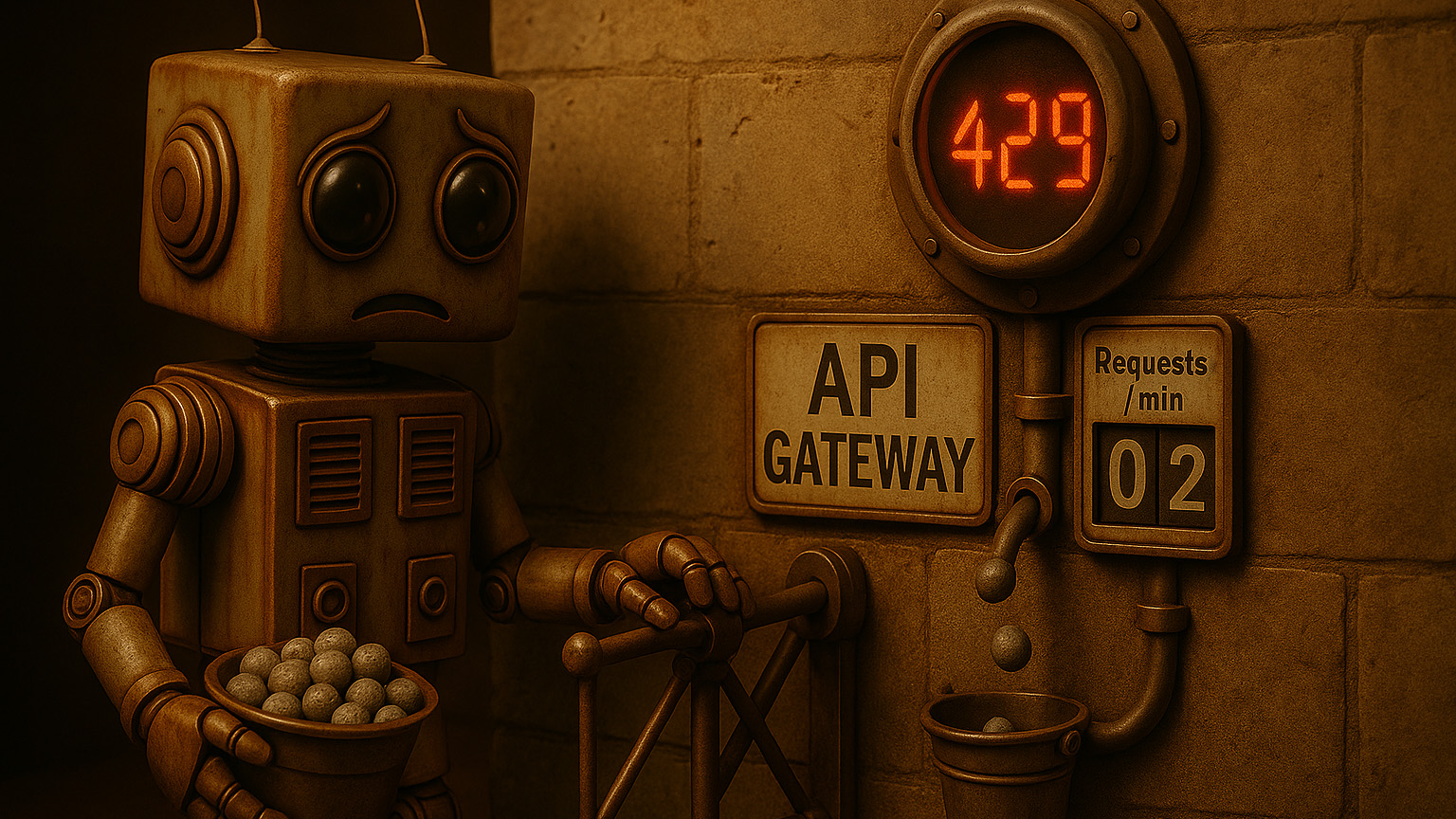

In the previous article, Part 5a, we saw how quickly large Terraform rollouts hit API limits, for example when DR tests create hundreds of resources in parallel and 429 errors trigger retries like an avalanche. This continuation picks up at that point and shows how you can use the Oracle Cloud Infrastructure API Gateway and Amazon API Gateway to deliberately manage limits, ensure clean observability, and make the whole setup operationally robust via "Policy as Code".